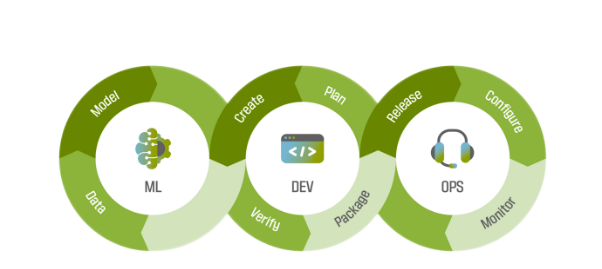

In the dynamic world of software development, two powerful approaches have emerged to streamline processes and improve collaboration: DevOps and MLOps. Although they share common goals, each has its own unique focus and application.

In this post, I want to take a closer look at the work of an MLOps specialist and show the main differences between DevOps and MLOps.

History and evolution

DevOps emerged in the late 2000s when organizations sought to revolutionize software development and deployment. The main goal was to shorten development cycles and accelerate the delivery of software, while improving its quality and reducing costs. DevOps promoted close collaboration between development teams and operations professionals, seeking to break down barriers and streamline processes.

Around 2016-2017, as machine learning grew in popularity, MLOps (Machine Learning Operations) came into focus. MLOps applies DevOps principles to machine learning concerns such as model reproducibility, scalability, and governance. It takes into account the dynamic, data- and compute-intensive nature of machine learning and aims to optimize the end-to-end lifecycle of machine learning models.

Understanding DevOps and the software development lifecycle

At the heart of DevOps is the Software Development Life Cycle (SDLC), which includes several stages that require close collaboration between development teams and operations professionals.

- Planning and requirements. At this stage, requirements are collected, software functionality is planned, and the overall architecture is designed. DevOps ensures feasibility and a smooth deployment process by involving operations teams early on.

- Development. Developers write code using techniques such as version control, continuous integration, and automated testing. The goal is to catch bugs early, improve code quality, and ensure stable and reliable software.

- Testing. DevOps prioritizes rigorous testing at various levels: unit testing, integration testing, and user acceptance testing. Automated testing and continuous feedback loops help identify and fix problems quickly, reducing the time and effort required for manual testing.

- Deployment. DevOps introduces the automation of the deployment process. Techniques such as containerization and Infrastructure as Code (IaC) enable consistent, reliable, and reproducible deployments across environments.

- Monitoring and operations. Continuous post-deployment monitoring helps identify performance issues, errors, and exceptions. Operations teams work closely with developers to solve problems, and their findings feed back into the planning stage, so each release cycle improves on the last.
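
To make the development and testing stages above more concrete, here is a minimal sketch of the kind of automated check a CI job might run on every commit. The `apply_discount` function and its business rule are hypothetical, invented purely for illustration, and the tests are written as plain functions on the assumption that a runner such as pytest collects them.

```python
def apply_discount(price: float, percent: float) -> float:
    """Return the price reduced by the given percentage (hypothetical rule)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def test_regular_discount() -> None:
    # A typical case the CI job verifies on every commit.
    assert apply_discount(200.0, 25) == 150.0


def test_zero_discount_keeps_price() -> None:
    assert apply_discount(80.0, 0) == 80.0


def test_rejects_invalid_percent() -> None:
    # Invalid input should fail loudly instead of producing a wrong price.
    try:
        apply_discount(200.0, 120)
    except ValueError:
        return
    raise AssertionError("expected ValueError for percent > 100")
```

If any of these checks fails, the build stops before the change reaches deployment, which is how bugs get caught early rather than in production.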

Adopting DevOps practices brings significant benefits, including reduced time to market, improved software quality, and lower costs.

By breaking down barriers and improving collaboration, organizations can respond more quickly to market changes and customer needs. DevOps makes delivery efficient, so high-quality software reaches users quickly and reliably.

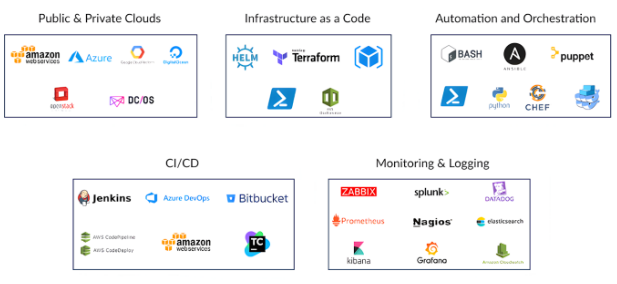

DevOps technology stack

- Version control: Git, hosted on platforms such as GitHub or GitLab, to track code changes and facilitate collaboration.

- Continuous Integration/Continuous Delivery (CI/CD): Jenkins, Travis CI, or AWS CodePipeline to automate the process of building, testing, and deploying code changes.

- Containerization: Docker for packaging applications and their dependencies into isolated containers, providing a consistent and reproducible environment.

- Infrastructure as Code (IaC): Terraform or AWS CloudFormation to define and manage infrastructure as code for repeatable and scalable deployment.

- Monitoring: Tools like Amazon CloudWatch, Splunk, or Prometheus to track application performance, identify issues, and capture valuable data.

- Collaboration: Slack or Microsoft Teams to communicate, share knowledge and improve team collaboration.

Let’s look at how one software development company uses this technology stack and how our collaboration helped improve its processes.

Git, in particular GitHub, has become an indispensable tool for effective code change management and project collaboration. Our team helped them migrate all their code and workflow from GitLab to GitHub, ensuring a smooth transition and taking advantage of the additional benefits and features that GitHub offers.

Jenkins plays a key role in automating their build and deployment processes. Now their team can focus on development, knowing that their continuous integration and continuous deployment processes are working efficiently.

Docker containers ensure consistent deployment of their application across different environments. This simplifies setup and gives the application portability and isolation. In addition, they use Kubernetes to orchestrate and manage containers, allowing them to efficiently scale and manage the performance of their applications in cloud environments.

Terraform helped them automate their cloud infrastructure management. Amazon CloudWatch provides comprehensive monitoring data that helps them quickly identify and resolve performance issues.

MLOps: Focusing on the Machine Learning Lifecycle

MLOps represents a specialized lifecycle tailored to the unique requirements of machine learning models.

- Data collection and preparation. This stage centers on data management and traceability. It involves identifying data sources, pre-processing data, and ensuring its quality. MLOps emphasizes building reliable data pipelines and solving data-related problems such as bias and missing data.

- Development and training of models. Data scientists experiment with different algorithms and techniques to build and train models. MLOps encourages the use of model registries and version control systems such as MLflow or Amazon SageMaker to effectively track and manage models, ensuring reproducibility and facilitating collaboration.

- Deployment of the model. MLOps automates the deployment process, ensuring consistency and scalability. Containerization, microservices, and tools like Amazon SageMaker or Kubernetes help package and deploy models as reusable components, making it easier to manage and update deployments.

- Model monitoring and retraining. Continuous monitoring reveals performance issues, model drift, and data quality issues. MLOps uses monitoring solutions such as Amazon SageMaker Model Monitor to detect anomalies and initiate retraining when necessary, ensuring models are accurate and reliable.

- Model management and decommissioning. MLOps includes practices for documenting model performance, ensuring compliance with regulatory requirements such as GDPR or HIPAA, and managing model risks. When a model becomes obsolete, MLOps provides a safe framework for decommissioning and replacing it.
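
As an illustration of the monitoring and retraining stage above, the sketch below computes a Population Stability Index (PSI) between a training-time feature sample and a production sample. PSI is one common drift measure chosen here for simplicity; it is not taken from the tools named above and does not reflect how Amazon SageMaker Model Monitor works internally. The function names, the bin count, and the 0.2 threshold (a widely used rule of thumb) are all assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one feature; larger values mean more drift."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 if every expected value is identical.
    width = (hi - lo) / bins or 1.0

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the training range are clamped to the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def needs_retraining(psi: float, threshold: float = 0.2) -> bool:
    """Assumed policy: flag the model for retraining above the threshold."""
    return psi > threshold
```

In practice, a scheduled job could run a check like this per feature and open a retraining task when the flag is raised, rather than retraining on a fixed calendar.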

MLOps brings the benefits of DevOps to the world of machine learning. It speeds up experimentation and frees data scientists to focus on research and innovation. MLOps also supports data management and regulatory compliance, reducing legal and ethical risks.

By simplifying the machine learning lifecycle, MLOps helps organizations deploy robust and scalable models, increasing overall efficiency and reducing time to market for ML initiatives.

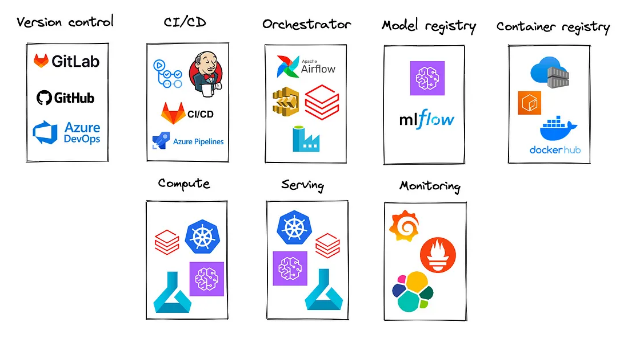

The MLOps technology stack

- Data Management: Tools like Amazon SageMaker Data Wrangler or Apache Spark for big data processing, and Pandas for data processing and analysis.

- Model development: Python libraries such as TensorFlow, PyTorch or Scikit-learn for building and training models, often integrated with Amazon SageMaker for scalable training.

- Model Registry and Versioning: MLflow or Amazon SageMaker Model Registry to track and manage models, ensure reproducibility, and facilitate collaboration.

- Containerization: Docker for packaging models and their dependencies, enabling consistent deployment across environments.

- Deployment: Kubernetes or Amazon SageMaker endpoints to orchestrate and scale model deployments, simplifying traffic and update management.

- Monitoring: Amazon SageMaker Model Monitor, Prometheus, and Grafana to track model performance, detect anomalies, and trigger alerts.

- Model serving: Amazon SageMaker hosting or TensorFlow Serving for deploying models in production with high availability and scalability.

Let’s take a look at the elements of this technology stack and how one of our customers developing a fraud detection model is using them to improve their processes.

Amazon SageMaker, a powerful machine learning platform, provides various tools for model development and deployment. Specifically, the customer's team uses SageMaker Data Wrangler to prepare data for model training. PyTorch, a well-known machine learning framework, is used to develop the fraud detection model itself.

Amazon SageMaker Model Registry allows them to efficiently manage model versions. They can store, track and deploy different iterations of their model, ensuring control and reproducibility.
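
To show what storing, tracking, and promoting model iterations means in practice, here is a deliberately simplified in-memory registry. It is a conceptual sketch only: the `ModelRegistry` and `ModelVersion` classes, the stage names, and the example URIs are hypothetical and are not the Amazon SageMaker Model Registry or MLflow API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str  # where the trained model file lives (hypothetical URI)
    metrics: Dict[str, float]
    stage: str = "staging"


@dataclass
class ModelRegistry:
    _versions: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(
        self, name: str, artifact_uri: str, metrics: Dict[str, float]
    ) -> ModelVersion:
        """Store a new iteration; version numbers increase by one per model name."""
        versions = self._versions.setdefault(name, [])
        model_version = ModelVersion(len(versions) + 1, artifact_uri, dict(metrics))
        versions.append(model_version)
        return model_version

    def promote(self, name: str, version: int) -> ModelVersion:
        """Move one version to production and archive the previous one."""
        versions = self._versions[name]
        target = next((mv for mv in versions if mv.version == version), None)
        if target is None:
            raise KeyError(f"{name} has no version {version}")
        for mv in versions:
            if mv.stage == "production":
                mv.stage = "archived"
        target.stage = "production"
        return target

    def production(self, name: str) -> Optional[ModelVersion]:
        """Return the version currently serving traffic, if any."""
        for mv in self._versions.get(name, []):
            if mv.stage == "production":
                return mv
        return None
```

Because every iteration keeps its artifact location and metrics, rolling back is just another `promote` call on an earlier version, which is the control and reproducibility a real registry provides at scale.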

SageMaker Pipelines simplifies the process of orchestrating and automating the entire machine learning workflow. They can define the sequence of steps, from data preparation to model training and deployment, creating a continuous and automated process.
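
The idea of a defined sequence of steps can be sketched in plain Python. This is not the SageMaker Pipelines SDK; the step functions, the toy threshold "model", the sample rows, and the `min_accuracy` gate are all assumptions made up for illustration.

```python
from typing import Any, Callable, Dict, List, Tuple

Context = Dict[str, Any]
Step = Tuple[str, Callable[[Context], Context]]


def prepare_data(ctx: Context) -> Context:
    # Drop rows with a missing amount, a stand-in for real data cleaning.
    rows = [r for r in ctx["raw_rows"] if r["amount"] is not None]
    return {**ctx, "rows": rows}


def train_model(ctx: Context) -> Context:
    # Toy "model": one amount threshold halfway between the two classes.
    fraud = [r["amount"] for r in ctx["rows"] if r["is_fraud"]]
    legit = [r["amount"] for r in ctx["rows"] if not r["is_fraud"]]
    return {**ctx, "threshold": (min(fraud) + max(legit)) / 2}


def evaluate_model(ctx: Context) -> Context:
    hits = sum(
        (r["amount"] > ctx["threshold"]) == r["is_fraud"] for r in ctx["rows"]
    )
    return {**ctx, "accuracy": hits / len(ctx["rows"])}


def deploy_model(ctx: Context) -> Context:
    # Quality gate: mark the model as deployed only if it is accurate enough.
    return {**ctx, "deployed": ctx["accuracy"] >= ctx["min_accuracy"]}


PIPELINE: List[Step] = [
    ("prepare_data", prepare_data),
    ("train_model", train_model),
    ("evaluate_model", evaluate_model),
    ("deploy_model", deploy_model),
]

SAMPLE_ROWS = [
    {"amount": 20.0, "is_fraud": False},
    {"amount": 35.0, "is_fraud": False},
    {"amount": None, "is_fraud": False},
    {"amount": 900.0, "is_fraud": True},
    {"amount": 1200.0, "is_fraud": True},
]


def run_pipeline(steps: List[Step], ctx: Context) -> Context:
    """Run each step in order, passing the accumulated context along."""
    for name, step in steps:
        ctx = step(ctx)
        ctx = {**ctx, "completed": ctx.get("completed", []) + [name]}
    return ctx
```

Calling `run_pipeline(PIPELINE, {"raw_rows": SAMPLE_ROWS, "min_accuracy": 0.9})` runs all four steps in order. A managed orchestrator adds what this sketch leaves out, such as retries, caching, and running each step on its own infrastructure.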

Amazon SageMaker Model Monitor, integrated with popular monitoring tools such as Prometheus, provides powerful monitoring capabilities. They can monitor model performance in real-time, receive alerts on anomalies, and ensure that the model meets expectations.

Grafana, a dashboarding tool, lets the team visualize the model performance data collected by Prometheus. This allows them to quickly identify anomalies, track key metrics, and make informed decisions.

Similarities and key differences

Similarities

MLOps and DevOps both focus on people, process, and technology, promoting collaboration and automation. Both rely on continuous practices: continuous integration, continuous delivery or deployment, and continuous monitoring of code or model performance. They improve collaboration and communication, removing the barriers that stand in the way of a more efficient and effective organization.

Key differences

The main difference lies in their focus. DevOps deals with the delivery and maintenance of traditional software, with a focus on code releases and infrastructure management. MLOps, on the other hand, specifically addresses the unique challenges and lifecycle of machine learning models, emphasizing data management, model versioning, and adaptation to dynamic changes in data and algorithms.