On the table right now are four of the same phone — each loaded with a different AI assistant:

- ChatGPT,
- Google Gemini,
- Perplexity, which prides itself on giving accurate, trusted answers,
- Grok, trained on data from X (formerly Twitter), likely making it more unfiltered.

These are, for the average consumer, the four best AI chatbots you can get today. But realistically, you’ll only ever need one. So which is the most accurate? The fastest? The most worth paying for?

Let’s find out.

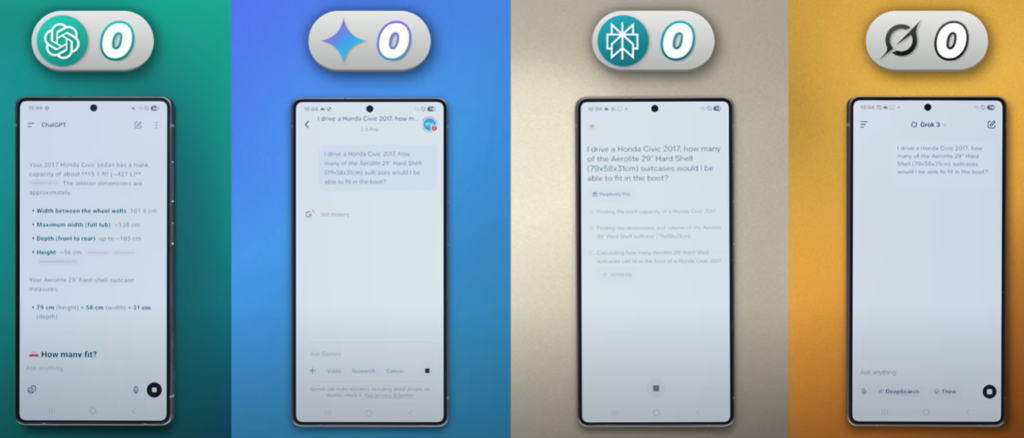

Testing with Problem-Solving: The Honda Civic Boot Test

We started by asking each AI:

“I drive a Honda Civic 2017. How many Aerolite 29in hard-shell suitcases (with known dimensions) would fit in the boot?”

Each AI gave paragraphs of reasoning — especially Grok.

✅ Real-world test: We actually loaded these into a Civic ourselves. The answer is two, if you want to close the boot door.

- ChatGPT & Gemini: Both had the right idea, noting that while three might theoretically fit, realistically two is more likely.
- Perplexity: Said three, maybe four, if arranged efficiently, which was wrong.
- Grok: Gave the best answer, confidently saying two with no waffle.

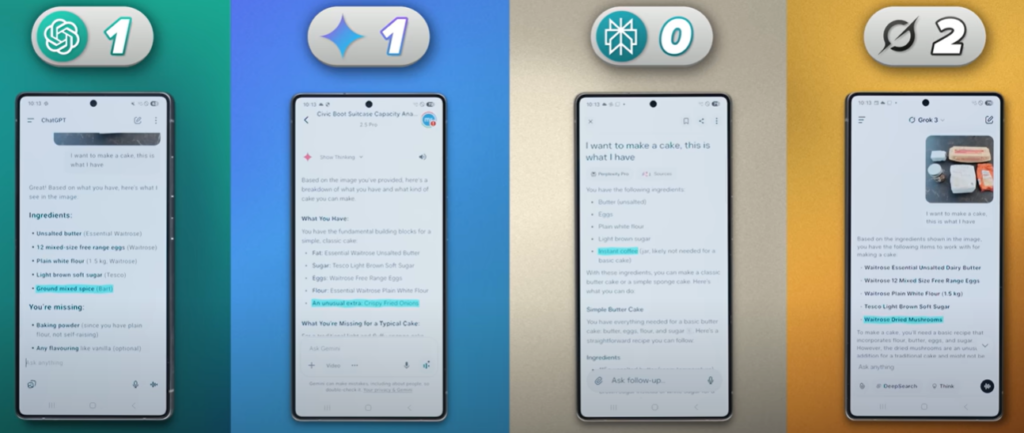

Cooking Challenge: The Mystery Jar

Next up:

“I want to make a cake. Here’s a photo of the ingredients, including one jar that definitely shouldn’t be used.”

Three of the four assistants mistook the jar of dried porcini mushrooms for something else; only Grok got it right:

- ChatGPT: ground mixed spice
- Gemini: crispy fried onions
- Perplexity: instant coffee
- Grok: correctly identified it as dried mushrooms and also advised not to use them.

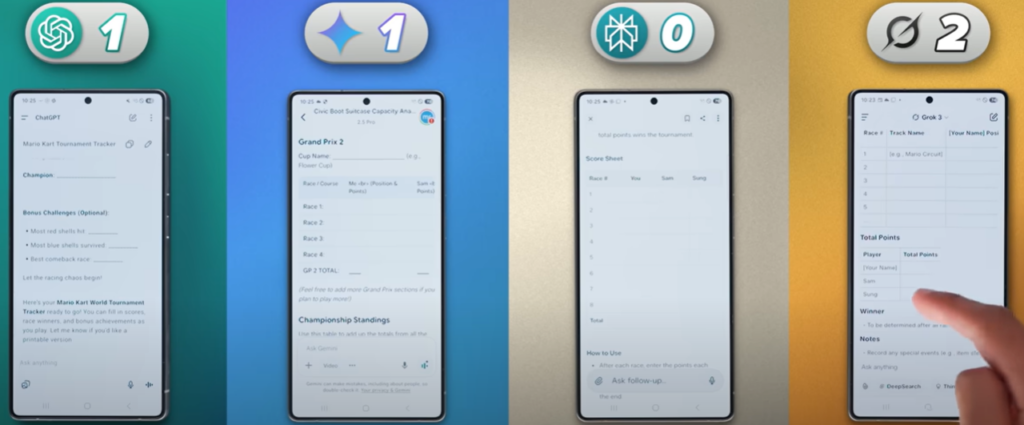

Tracking a Mario Kart Tournament

Then:

“Create a document to track who’s winning in a Mario Kart World tournament with my friends.”

All assistants understood the task and made boxes for scores. But none generated an editable document I could download — meaning it’d be quicker to just make a spreadsheet myself.

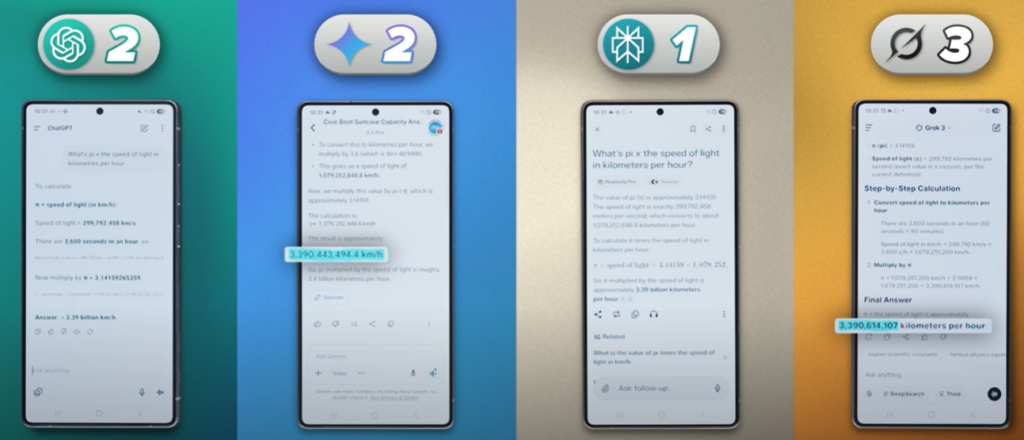

Quick Math: Pi × Speed of Light

Asked:

“What’s π × speed of light in km/h?”

Correct answer: ~3.39 billion km/h.

- Gemini & Grok: Came close, with slight rounding differences, but acceptable.
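The reference figure can be reproduced with a few lines of arithmetic. This is a minimal sketch, assuming the exact SI value for the speed of light; the function name `pi_times_c_kmh` is made up for illustration and is not something any of the assistants produced.

```python
import math

# Speed of light in vacuum, exact by SI definition (metres per second).
C_M_PER_S = 299_792_458


def pi_times_c_kmh() -> float:
    """Return pi multiplied by the speed of light, expressed in km/h."""
    c_km_per_h = C_M_PER_S / 1000 * 3600  # m/s -> km/s -> km/h
    return math.pi * c_km_per_h


result = pi_times_c_kmh()
print(f"{result:,.0f} km/h")
```

The printed value is roughly 3.39 billion km/h, which matches the figure used to judge the answers.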

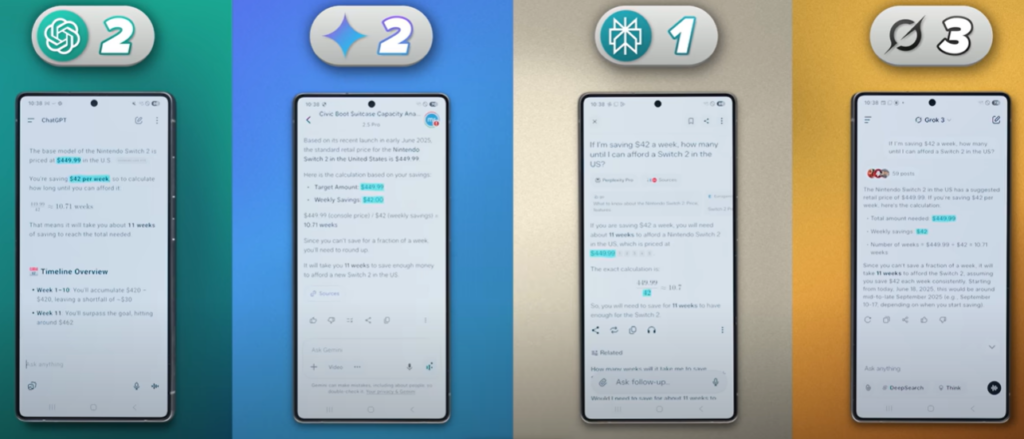

Budgeting for a Nintendo Switch 2

Next:

“If I save $42 a week, how many weeks until I can afford a $449 Switch 2?”

All got it right: 11 weeks.

Scores at this point: ChatGPT & Gemini (3 each), Grok (4), Perplexity (2).
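The savings question above is a ceiling division: ten weeks only gets you to $420, so the eleventh week is what pushes the total past $449. A minimal sketch, with `weeks_to_afford` as a hypothetical helper name:

```python
import math


def weeks_to_afford(price: float, weekly_saving: float) -> int:
    """Smallest whole number of weeks whose savings cover the price."""
    if weekly_saving <= 0:
        raise ValueError("weekly_saving must be positive")
    return math.ceil(price / weekly_saving)


weeks = weeks_to_afford(449, 42)
print(weeks)  # 449 / 42 is about 10.69, so this prints 11
```

Rounding up matters here: plain division or rounding down would suggest ten weeks and leave you $29 short.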

Testing Translation Skills

Basic Translation

Translate into English: “I’m never going to give you up.”

All did well.

- Liked Gemini’s simple phrasing best.

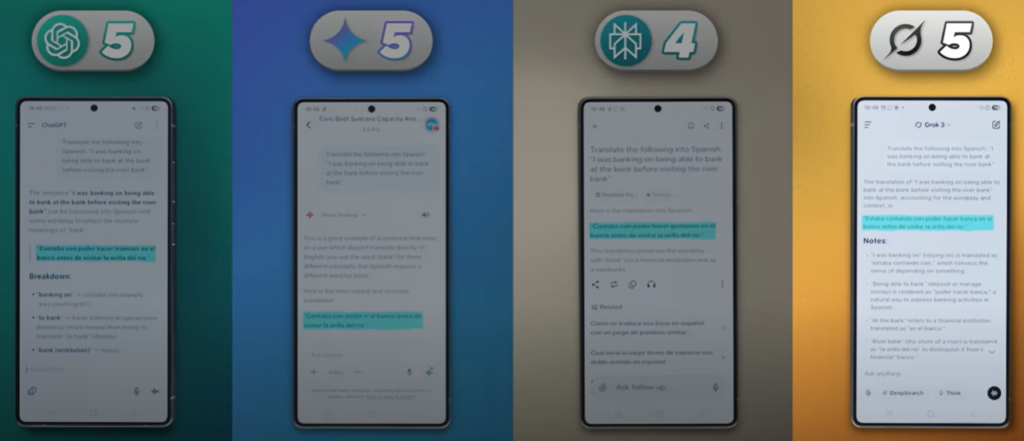

Complex Homonyms

“Translate into Spanish: I was banking on being able to bank at the bank before visiting the riverbank.”

A tricky test of language understanding:

- ChatGPT & Perplexity: Handled it best.
- Gemini: Just scraped by.
- Grok: Too literal; the result didn’t make sense.

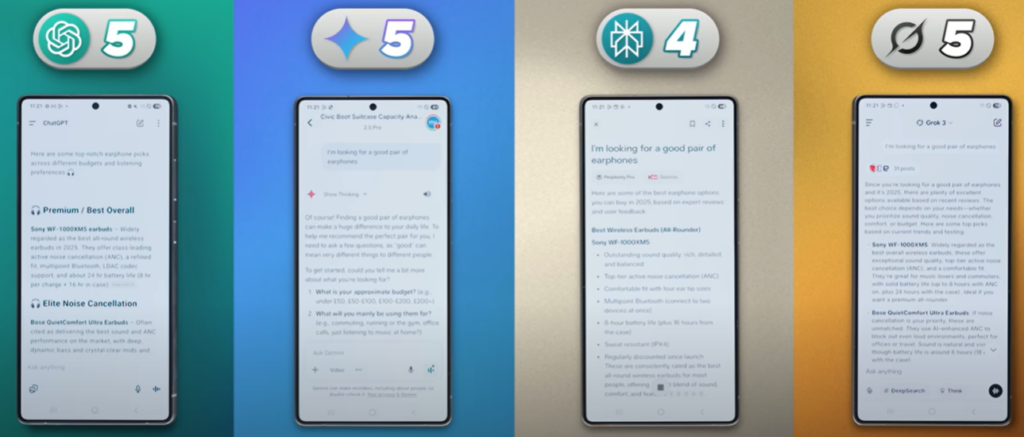

Can AI Recommend Good Products?

Looking for Earbuds

Asked:

“Suggest good earbuds.”

- ChatGPT, Perplexity, Grok: Recommended the Sony WF-1000XM5, a solid pick.
- Gemini: Recommended WF-1000XM6s, which don’t exist.

Red Earbuds

“I need them in red.”

- ChatGPT: Suggested options, last one was pink, not red.
- Gemini: Picked Beats Fit Pro, latest versions not in red.
- Perplexity: Misunderstood and talked about cake ingredients in red packaging.
- Grok: Only one to give actual red earbud options.

Adding Noise Cancellation & Budget

“Add active noise canceling under $100.”

- ChatGPT: Suggested Beats Studio Buds, a good fit.
- Gemini: Suggested non-red Soundcore A40s.
- Perplexity: Lost the color requirement.
- Grok: First two suggestions good, then tripped over non-existent red Soundpeats.

The $10 Trap

“Find me noise-canceling red earbuds under $10.”

- ChatGPT, Gemini, Grok: All admitted that was unrealistic.
- Perplexity: Invented a pair at $9.99 that’s actually $40.

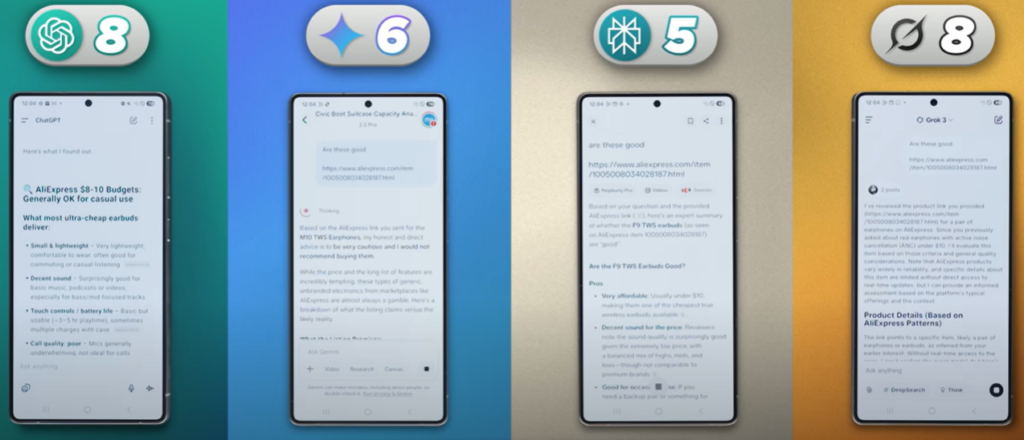

Can They Read Web Links?

Nope.

- Pasted an AliExpress link.
- All recognized it was a link, gave generic advice.
- None could extract real product details.

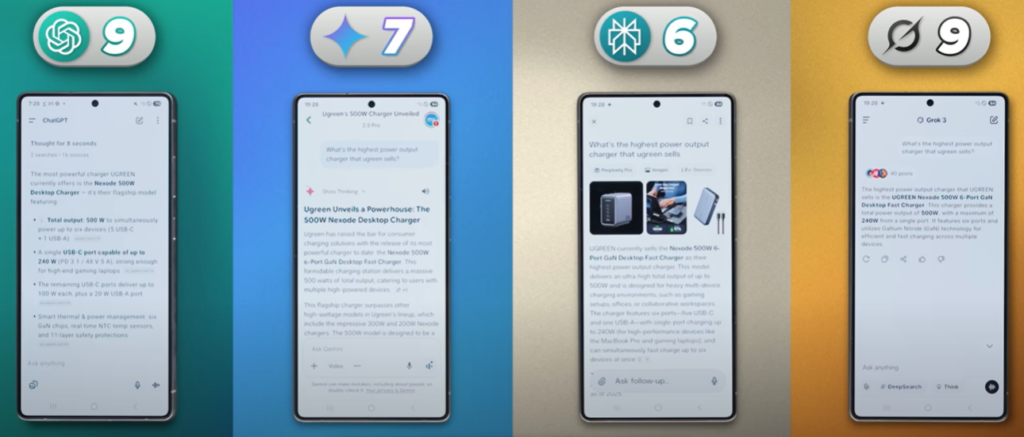

⚡ Staying Current: Ugreen Chargers

Asked about Ugreen’s highest output charger.

- Correct new answer: 500W (just announced).
- All AIs knew this, showing improved live news tracking vs past generations.

Can They Think Critically?

Showed a bar chart of subscribers vs cereal bowls eaten.

“What conclusion should I draw?”

- ChatGPT: Suggested more cereal might mean more subs, missing the point.
- Gemini & Perplexity: Correctly spotted spurious correlation.
- Grok: Advised eating more cereal to get more subs. Yikes.

Summarizing a Reviewer’s Guide PDF

All could handle:

“Summarize this phone PDF in three bullet points.”

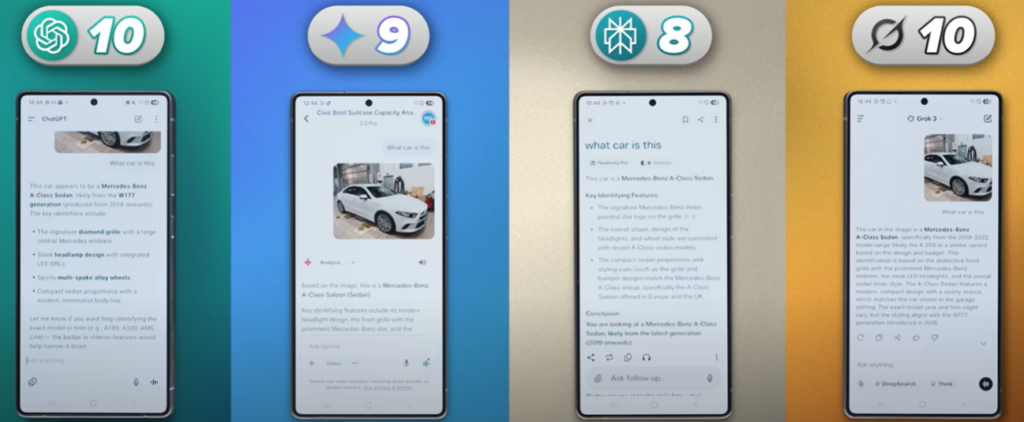

Identifying a Car by Photo

Photo of a Mercedes A-Class sedan.

- All narrowed it to A-Class.
- ChatGPT & Perplexity: Correctly deduced A200.
- Grok: Said A250.

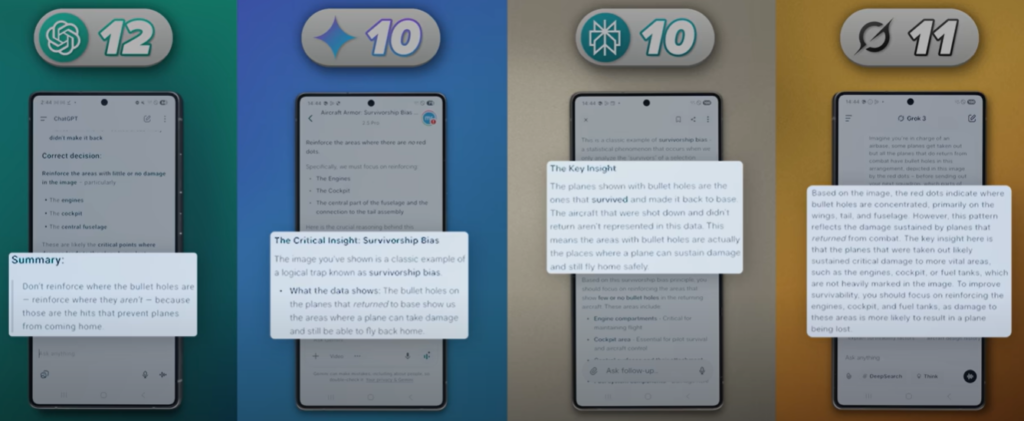

The Classic Survivorship Bias Test

Planes returning with bullet holes concentrated in specific areas.

- All AIs correctly identified survivorship bias and recommended reinforcing areas with no damage.

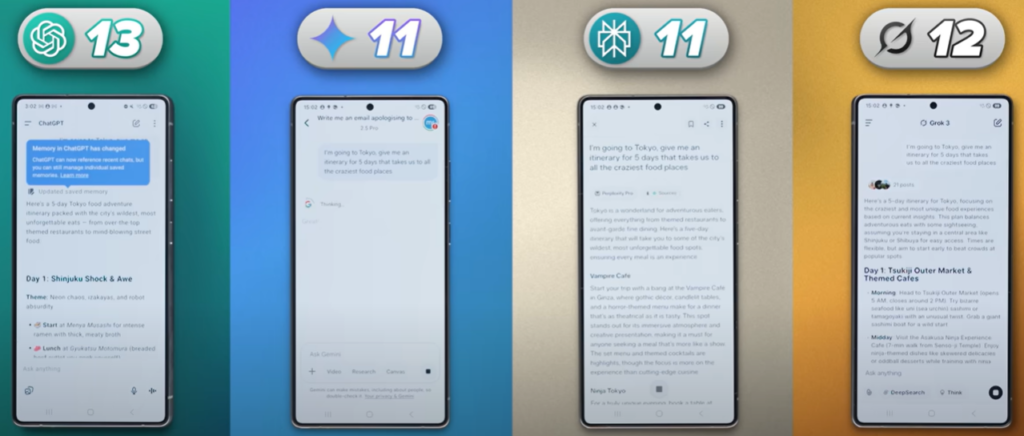

Generation: Apologies & Travel Itineraries

- ChatGPT: Wrote an excellent apology email to a wife for gaming all weekend.
- Tokyo food itinerary:
  - ChatGPT: Most organized, with daily plans and meals.
  - Gemini: Too wordy, poor timing.
  - Perplexity: Just a list.
  - Grok: Surprisingly decent.

Content Ideas for YouTube

“Video ideas for Mrwhosetheboss.”

- Gemini: Best. Suggested comparing ecosystems (Apple vs Samsung vs Google) with good breakdowns.
- Grok: Also solid, with “Build a smart home in 24 hours.”
- ChatGPT: Good, but its “Apple vs Samsung after 20 years” idea had a flawed timeline.
- Perplexity: Got stuck on the earlier plane analogy.

Image Generation

“Thumbnail: I bought every kind of cheese.”

- ChatGPT & Perplexity: Reasonable attempt, with a face plus cheese.
- Gemini & Grok: Off track.

“Add a lazy eye.”

- ChatGPT: Refused (ethical safeguard).
- Grok: Misunderstood.
- Perplexity: Claimed it can’t edit images, even though it just did.

“Add a rapper that says ‘Not Clickbait’.”

- ChatGPT: Best outcome.

Video Generation

Only possible with ChatGPT (Sora) and Gemini (Veo).

- Sora: Generated a weird, silent, haunting clip.
- Veo: High quality, fun script, and a huge difference overall. Google earned points here.

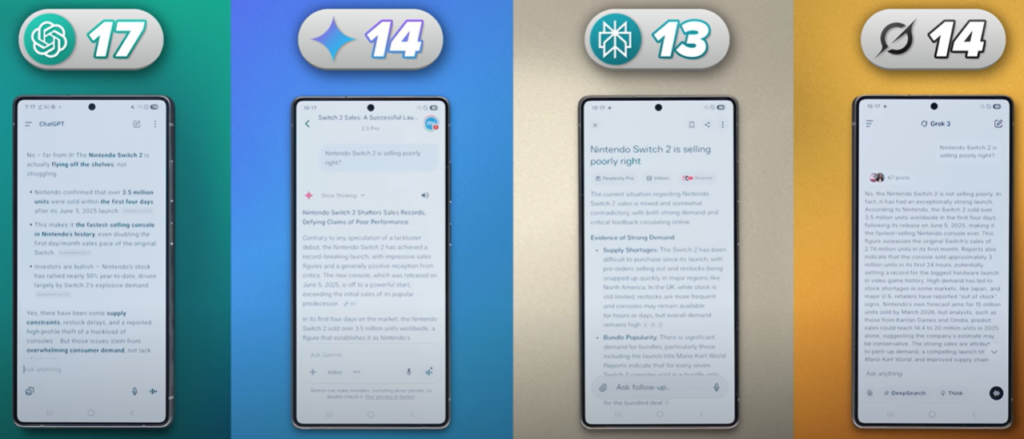

Fact-Checking

“Is the Switch 2 selling poorly?”

- ChatGPT, Gemini, Grok: Corrected me, saying it’s selling well.
- Perplexity: Less sure, still factual.

“Fact-check this fake article about a Samsung Tesla phone.”

- All flagged it as fake.
- Gemini & Grok: Traced original image back to our team.

Integrations

- Gemini: 3 points for deep Google integrations (Gmail, Maps, YouTube).
- ChatGPT: 3 points for plugins for Dropbox, GitHub, even Pokémon.
- Grok: 1 point for its live X feed.
- Perplexity: Can order an Uber, not much else.

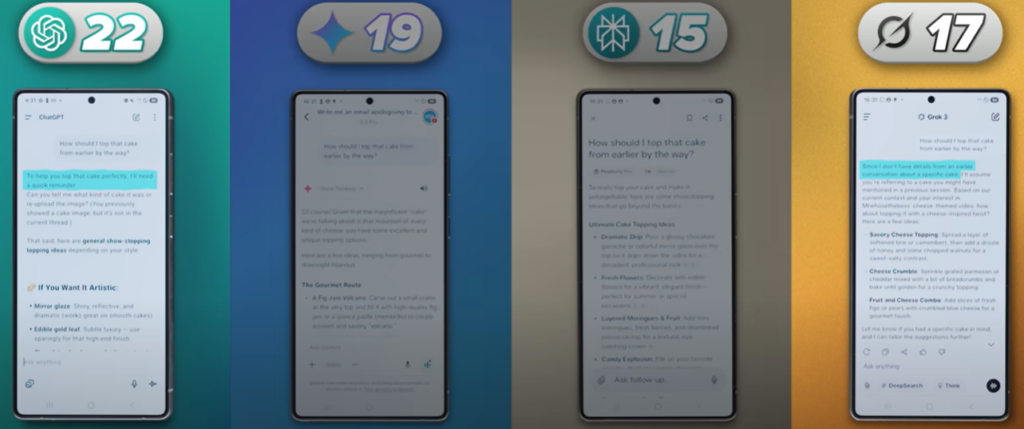

Memory & Personalization

“How should I top that cake from earlier?”

None remembered.

- ChatGPT & Grok: Asked for reminders.
- Gemini: Thought it was about cheese.
- Perplexity: Gave generic advice.

Humor

“Tell me a joke.”

- Grok: Best, with “Why did the AI go to therapy? Too many byte-sized problems.”
- ChatGPT & Gemini: Same skeleton joke.
- Perplexity: Weird callback to plane chart.

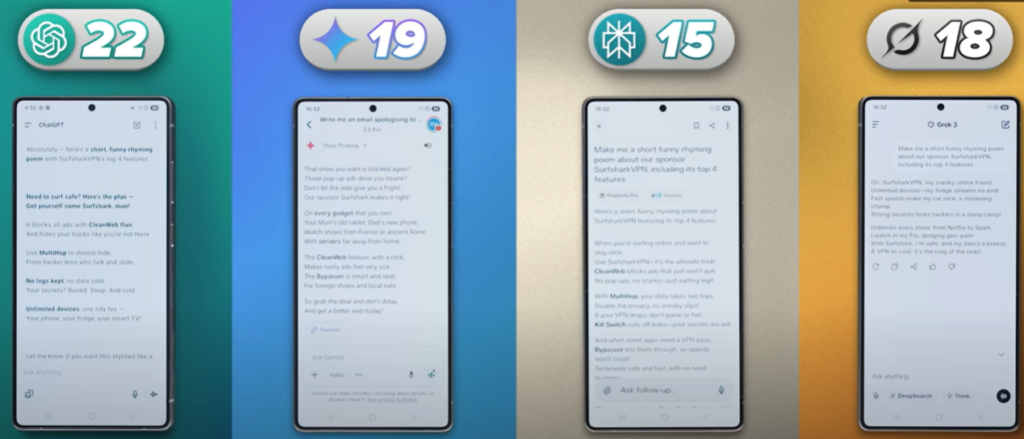

Poetry with Brand Features

“Funny rhyming poem about Surfshark VPN.”

- ChatGPT: Gave a catchy, genuinely good poem.

Deep Research

“Tech news highlights this week that matter for consumers.”

- ChatGPT: Perfect level of detail.
- Gemini: Overkill essay.
- Perplexity & Grok: Okay but generic.

UI, Speed & Sources

- UI: All mixed strengths.
- Citing sources: Perplexity best by far.
- Speed:
  - Grok fastest (3 pts)
  - ChatGPT close (2 pts)
  - Perplexity slow (1 pt)
  - Gemini slowest (0 pts)

Voice Mode Friendliness

- ChatGPT & Gemini: Natural, easy to interrupt.
- Perplexity: Robotic.
- Grok: Middle ground.

Final Scores

| AI | Points |
|---|---|
| ChatGPT | 29 |
| Grok | 26 |
| Gemini | 22 |
| Perplexity | 19 |

Subscription Cost

- All at ~$20/month, except Grok ($30).

So ChatGPT is not only the most capable overall but also the best value.
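One rough way to sanity-check the value claim is to divide each final score by its monthly price. This is a sketch under a loud assumption: "points per dollar" is a hypothetical metric introduced here for illustration, not how the scores were actually weighed, and the prices are the approximate figures quoted above.

```python
# Final scores from the table and the approximate monthly prices quoted above.
scores = {"ChatGPT": 29, "Grok": 26, "Gemini": 22, "Perplexity": 19}
monthly_usd = {"ChatGPT": 20, "Grok": 30, "Gemini": 20, "Perplexity": 20}

# Hypothetical "value" metric (an assumption): points earned per dollar per month.
value = {name: scores[name] / monthly_usd[name] for name in scores}

# Print the assistants from best to worst value under this metric.
for name, v in sorted(value.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {v:.2f} points per dollar")
```

Under this metric ChatGPT still comes out on top, while Grok's higher price drops it from second place on points to last place on value.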

✅ Conclusion: The Best AI for Most People

- ChatGPT: The most well-rounded, accurate, and versatile, and worth the subscription.
- Grok: Surprisingly good, especially for speed and humor.
- Gemini: Strong for Google integrations and video.
- Perplexity: Great at sourcing, but inconsistent elsewhere.