If you run a site that grows steadily (more products, more traffic from advertising), sooner or later you will have to prepare it for high load. But what if your site is not growing, yet the server fails more and more often and data gets locked? That is the problem the team of an online lighting store with a catalog of more than 100,000 products ran into.

Initial situation

The project was hosted on a server with enough resources to keep the site fast and stable even under very high load. Nevertheless, as soon as traffic rose even slightly, the server stopped responding to user actions or responded very slowly.

Finding the problem

An audit of the server and site settings was carried out in two stages: back-end analysis and front-end analysis. It revealed a slow back end: the most visited pages took about 80 seconds to load, which significantly reduced conversion.

The main causes turned out to be a misconfigured cache and a misconfigured database.

Based on these findings, a four-step action plan was drawn up, and it produced good results. Here is what was done.

Solution

Step 1. Database setup

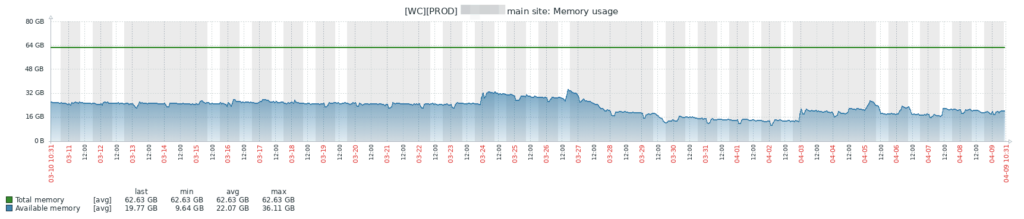

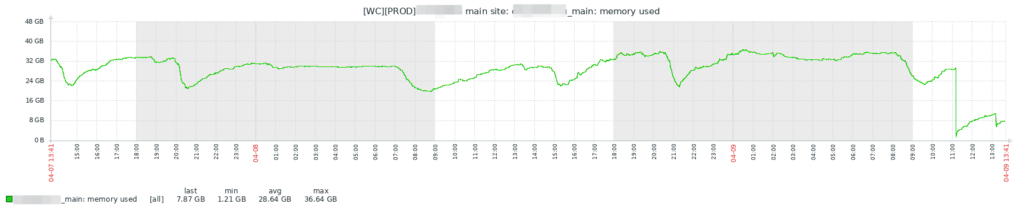

At the first stage, MySQL was configured for the available resources and the project's load, without changing the storage engine yet. This work was aimed primarily at optimizing RAM consumption to keep the server from falling into swap: once it ran out of RAM, the server had started using the swap file, and the site slowed down.
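Whether a MySQL configuration fits in RAM can be checked with a common rule-of-thumb estimate: the global buffers plus, for every allowed connection, the per-thread buffers. The sketch below assumes that formula; all values, including the 16 GiB server and the 4 GiB reserved for Nginx, PHP-FPM and the operating system, are hypothetical and are not the project's real settings.

```python
MiB = 1024 ** 2
GiB = 1024 ** 3

def mysql_memory_estimate(global_buffers: dict, per_thread_buffers: dict, max_connections: int) -> int:
    """Rule-of-thumb peak: shared buffers plus per-thread buffers for every allowed connection."""
    return sum(global_buffers.values()) + max_connections * sum(per_thread_buffers.values())

def fits_in_ram(estimate: int, total_ram: int, reserved_for_others: int) -> bool:
    """True if the estimate leaves the reserved memory free, so the server should not need swap."""
    return estimate <= total_ram - reserved_for_others

# Hypothetical settings, for illustration only.
global_buffers = {
    "innodb_buffer_pool_size": 8 * GiB,
    "innodb_log_buffer_size": 64 * MiB,
    "key_buffer_size": 32 * MiB,
}
per_thread_buffers = {
    "sort_buffer_size": 2 * MiB,
    "join_buffer_size": 2 * MiB,
    "read_buffer_size": 1 * MiB,
    "read_rnd_buffer_size": 1 * MiB,
    "thread_stack": 256 * 1024,
}

estimate = mysql_memory_estimate(global_buffers, per_thread_buffers, max_connections=300)
print(f"estimated peak: {estimate / GiB:.2f} GiB")
print("fits:", fits_in_ram(estimate, total_ram=16 * GiB, reserved_for_others=4 * GiB))
```

The estimate is a heuristic, not a hard bound (a single query can, for instance, allocate join_buffer_size more than once), but it shows why max_connections multiplied by oversized per-thread buffers can push a server past its physical RAM.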

Step 2. Switching the storage engine to InnoDB

Why was InnoDB chosen?

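MyISAM, which the project used before, keeps separate data and index files for each table. InnoDB can keep data in a shared system tablespace or, with innodb_file_per_table enabled (the default since MySQL 5.6.6), in one tablespace file per table. InnoDB ensures data reliability through transactions and crash recovery, and it locks data at the row level.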

The main advantage of InnoDB for this project is how it handles concurrent queries. When a query modifies data in InnoDB, only the affected rows are locked, whereas MyISAM locks the entire table. Until the locking statement finishes, no other session can change the locked rows (InnoDB) or use the locked table at all (MyISAM). Because a row lock blocks only the sessions that need those particular rows, many queries can run in parallel, and ordinary SELECTs in InnoDB read a consistent snapshot without waiting for row locks. As a result, InnoDB processes queries much faster under concurrent load.
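The difference in lock granularity can be illustrated with a toy lock manager. This is a simplified model, not how MyISAM or InnoDB are implemented (InnoDB also uses intention locks, gap locks and MVCC); the class and its names are hypothetical. Two sessions try to update different products at the same moment.

```python
from dataclasses import dataclass, field

@dataclass
class ToyLockManager:
    """Toy exclusive-lock table; granularity is "table" (MyISAM-like) or "row" (InnoDB-like)."""
    granularity: str
    holders: dict = field(default_factory=dict)  # lock key -> session holding it

    def _key(self, table: str, row_id: int) -> tuple:
        # A table lock covers every row, so all rows of a table share one key.
        return (table,) if self.granularity == "table" else (table, row_id)

    def try_write_lock(self, session: str, table: str, row_id: int) -> bool:
        """Grant the lock if it is free or already ours; False means the caller must wait."""
        key = self._key(table, row_id)
        owner = self.holders.get(key)
        if owner is None or owner == session:
            self.holders[key] = session
            return True
        return False

def two_updates(granularity: str) -> list:
    """Two sessions update different products at the same moment."""
    locks = ToyLockManager(granularity)
    return [
        locks.try_write_lock("session A", "products", 1),
        locks.try_write_lock("session B", "products", 2),
    ]

print("table-level:", two_updates("table"))
print("row-level:  ", two_updates("row"))
```

With table-level locking the second session has to wait even though it touches a different row; with row-level locking both proceed at once.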

InnoDB itself was also tuned. The adjusted parameters included the following (innodb_file_format and innodb_locks_unsafe_for_binlog were removed in MySQL 8.0 and apply only to earlier versions):

```ini
# InnoDB parameters
innodb_file_per_table
innodb_flush_log_at_trx_commit
innodb_flush_method
innodb_buffer_pool_size
innodb_log_file_size
innodb_buffer_pool_instances
innodb_file_format
innodb_locks_unsafe_for_binlog
innodb_autoinc_lock_mode
transaction-isolation
innodb-data-file-path
innodb_log_buffer_size
innodb_io_capacity
innodb_io_capacity_max
innodb_checksum_algorithm
innodb_read_io_threads
innodb_write_io_threads
```
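These parameters interact. One example, assuming MySQL 5.7.5 or later: innodb_buffer_pool_size must be equal to or a multiple of innodb_buffer_pool_chunk_size × innodb_buffer_pool_instances (the chunk size defaults to 128M), and MySQL automatically adjusts the pool size upward when it is not. The sketch below reproduces only that rounding; the requested sizes are hypothetical, and it assumes chunk size × instances does not exceed the requested pool size.

```python
MiB = 1024 ** 2
GiB = 1024 ** 3

def effective_buffer_pool_size(requested: int, instances: int, chunk_size: int = 128 * MiB) -> int:
    """Round innodb_buffer_pool_size up to a multiple of chunk_size * instances.

    Assumes chunk_size * instances <= requested; the opposite case is not modeled here.
    """
    unit = chunk_size * instances
    return -(-requested // unit) * unit  # ceiling division, then back to bytes

print(effective_buffer_pool_size(9 * GiB, instances=16) // GiB)  # units of 16 x 128M = 2G
print(effective_buffer_pool_size(8 * GiB, instances=8) // GiB)   # units of 8 x 128M = 1G
```

Choosing the pool size and instance count together avoids ending up with more memory allocated than the RAM budget from step 1 allowed for.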

Intermediate results

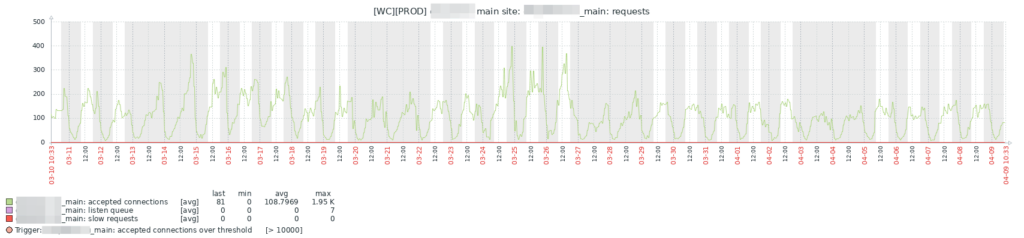

After steps 1 and 2, the number of simultaneous connections to the web server dropped, because database queries were processed faster and connections were released sooner.

This in turn reduced RAM consumption.
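The link between faster queries and fewer open connections follows from Little's law: in a steady state, the average number of requests in flight equals the arrival rate times the time each request spends in the system. The traffic figures below are hypothetical.

```python
def concurrent_connections(arrival_rate: float, time_per_request: float) -> float:
    """Little's law: average requests in flight = arrival rate (per second) x time in system (seconds)."""
    return arrival_rate * time_per_request

# Hypothetical traffic: 20 requests per second, before and after the database tuning.
before = concurrent_connections(20, 4.0)  # each request held a connection for 4 s
after = concurrent_connections(20, 0.5)   # each request now finishes in 0.5 s
print(before, after)
```

The traffic is the same in both cases; only the time per request changed, yet the number of connections held open at once (and the worker memory tied to them) drops roughly eightfold in this example.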

Step 3. Reconfiguring Nginx: installing the brotli and pagespeed modules and setting up proxy buffering and caching

Nginx is positioned as a simple, fast, and reliable server without unnecessary features. When it proxies requests to backend servers, it can buffer their responses (proxy_buffering) and cache them (proxy_cache) to improve performance; both mechanisms were put to use.
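To show what proxy_cache buys, here is a toy in-memory model of a caching reverse proxy: a response is stored under its URI for a validity period (similar in spirit to proxy_cache_valid) and served without contacting the backend until it expires. Real Nginx keeps cached responses on disk, with keys in a shared-memory zone, and supports far richer rules; the class, the 60-second lifetime and the fake clock are assumptions for illustration.

```python
import time
from typing import Callable, Dict, Tuple

class ToyResponseCache:
    """In-memory stand-in for a caching reverse proxy (not Nginx's implementation)."""

    def __init__(self, backend: Callable[[str], str], ttl: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.backend = backend
        self.ttl = ttl
        self.clock = clock
        self.entries: Dict[str, Tuple[float, str]] = {}  # uri -> (expires_at, body)

    def get(self, uri: str) -> str:
        now = self.clock()
        entry = self.entries.get(uri)
        if entry is not None and now < entry[0]:
            return entry[1]                  # hit: the backend is not contacted
        body = self.backend(uri)             # miss or expired entry: ask the backend
        self.entries[uri] = (now + self.ttl, body)
        return body

# Usage with a fake clock so the example is deterministic.
backend_calls = []

def slow_backend(uri: str) -> str:
    backend_calls.append(uri)
    return f"<html>{uri}</html>"

fake_now = [0.0]
cache = ToyResponseCache(slow_backend, ttl=60, clock=lambda: fake_now[0])
cache.get("/catalog/lamps")  # t=0: miss
fake_now[0] = 5.0
cache.get("/catalog/lamps")  # t=5: hit
fake_now[0] = 61.0
cache.get("/catalog/lamps")  # t=61: entry expired, miss
print(len(backend_calls))
```

Three requests reach the proxy, but only two reach the backend; on a busy catalog page the ratio of hits to backend calls is what takes load off PHP and MySQL.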

The Nginx settings held some surprises, too. The client ran an ordinary online store, yet the buffering settings found during the audit were sized almost as if for a streaming service. Among other things, the client_max_body_size value (the maximum allowed size of a client request body) was reduced significantly, which, together with the rest of the Nginx reconfiguration, further cut memory consumption.
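Why oversized buffering settings cost memory can be estimated with simple arithmetic: Nginx's proxy buffers are allocated per proxied connection, so their size is multiplied by concurrency. The audit's actual values are not given, so the oversized settings and the 500 connections below are hypothetical; the defaults (proxy_buffer_size 4k, proxy_buffers 8 4k) assume a 4 KiB memory page. Buffers are allocated on demand, so this is a worst-case figure for proxy buffering alone.

```python
KiB = 1024
MiB = 1024 * KiB

def proxy_buffering_worst_case(proxy_buffer_size: int, buffers_number: int,
                               buffers_size: int, connections: int) -> int:
    """Worst case: every proxied response fills its header buffer and all of its proxy_buffers."""
    per_connection = proxy_buffer_size + buffers_number * buffers_size
    return per_connection * connections

# Defaults on a 4 KiB-page system: proxy_buffer_size 4k; proxy_buffers 8 4k.
defaults = proxy_buffering_worst_case(4 * KiB, 8, 4 * KiB, connections=500)
# Hypothetical oversized settings: proxy_buffer_size 1m; proxy_buffers 64 1m.
oversized = proxy_buffering_worst_case(1 * MiB, 64, 1 * MiB, connections=500)
print(f"defaults:  {defaults / MiB:.1f} MiB")
print(f"oversized: {oversized / MiB:.0f} MiB")
```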

Step 4. Optimizing PHP-FPM and Memcache Settings and Disabling Apache

PHP-FPM is often used together with Nginx: Nginx serves static files and hands PHP script execution over to PHP-FPM. This setup is generally faster than the common Nginx + Apache model.
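A common way to split the work in PHP shops is a `location /` block with `try_files $uri /index.php?$query_string;` plus a `.php` regex location that passes scripts to PHP-FPM. The article does not show the project's configuration, so this routing rule is an assumption; the hypothetical `route` function below only models its decision logic.

```python
def route(uri: str, existing_files: set) -> str:
    """Model of a .php regex location plus `try_files $uri /index.php?$query_string` in location /."""
    path = uri.split("?", 1)[0]       # locations are matched against the path, not the query string
    if path.endswith(".php"):
        return "php-fpm"              # the regex location wins over the prefix location /
    if path in existing_files:
        return "nginx (static file)"  # try_files finds $uri on disk and Nginx serves it directly
    return "php-fpm (/index.php)"     # fallback to the front controller

static_files = {"/img/lamp.webp", "/css/site.css"}
print(route("/img/lamp.webp", static_files))
print(route("/catalog/lamps?page=2", static_files))
```

Images, styles and scripts never reach PHP at all, so PHP-FPM workers are spent only on pages that actually need server-side code.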

Apache processes requests more slowly. For example, when .htaccess overrides are enabled, Apache looks for and reads per-directory configuration files on every request, wasting system resources and time. Since Apache on this server was not serving anything and only consumed resources, it was simply turned off.
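If the extra configuration reads are .htaccess lookups, their cost grows with directory depth: with AllowOverride enabled for the whole tree (an assumption here), Apache checks for a .htaccess file in every directory from the filesystem root down to the one holding the requested file, on every request. Nginx has no per-directory configuration files and does no such lookups. The function name below is hypothetical.

```python
from pathlib import PurePosixPath

def htaccess_lookups(requested_file: str) -> list:
    """Every .htaccess path checked for one request when overrides are allowed from / down."""
    directory = PurePosixPath(requested_file).parent
    chain = list(directory.parents)[::-1] + [directory]  # "/" first, the file's own directory last
    return [str(d / ".htaccess") for d in chain]

for path in htaccess_lookups("/www/htdocs/example/index.html"):
    print(path)
```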

Another necessary step was switching PHP-FPM to a Unix socket. Why was this needed? Nginx is a fast web server, but it cannot execute PHP scripts on its own; that requires a PHP-FPM backend. To keep this pairing fast, Nginx was connected to PHP-FPM through a Unix domain socket instead of a TCP connection. The traffic then stays inside the operating system kernel and skips the TCP/IP stack, which gives a noticeable speed-up.
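In configuration terms, the PHP-FPM pool listens on a socket file (`listen = /run/php/php-fpm.sock`) and Nginx points at it with `fastcgi_pass unix:/run/php/php-fpm.sock;`; the path is hypothetical and distribution-specific, both processes must run on the same host, and the Nginx user needs access to the file (see the pool's listen.owner, listen.group and listen.mode options). The sketch below shows only the transport: a client connecting by file path rather than IP address and port. It does not speak FastCGI, and the fake worker is a stand-in for PHP-FPM.

```python
import os
import socket
import tempfile
import threading

def read_until_eof(sock: socket.socket) -> bytes:
    """Read from a stream socket until the peer closes or shuts down its write side."""
    chunks = []
    while True:
        chunk = sock.recv(4096)
        if not chunk:
            return b"".join(chunks)
        chunks.append(chunk)

def fake_fpm_worker(server: socket.socket) -> None:
    """Stand-in for PHP-FPM: accept one connection and answer it."""
    conn, _ = server.accept()
    with conn:
        request = read_until_eof(conn)
        conn.sendall(b"handled " + request)

def call_backend_over_unix_socket(request: bytes) -> bytes:
    with tempfile.TemporaryDirectory() as tmp:
        sock_path = os.path.join(tmp, "php-fpm.sock")  # hypothetical socket path
        with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as server:
            server.bind(sock_path)                     # creates the socket file
            server.listen(1)
            worker = threading.Thread(target=fake_fpm_worker, args=(server,))
            worker.start()
            with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as client:
                client.connect(sock_path)              # no IP address, no port, no TCP handshake
                client.sendall(request)
                client.shutdown(socket.SHUT_WR)        # signal the end of the request
                response = read_until_eof(client)
            worker.join()
    return response

print(call_backend_over_unix_socket(b"GET /catalog/lamps"))
```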

Work results

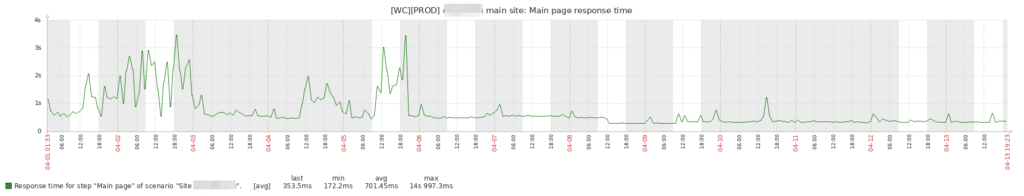

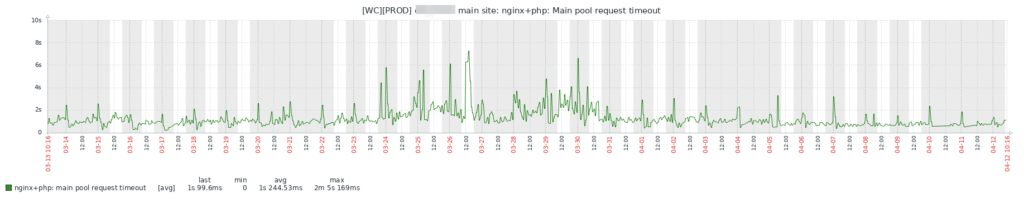

1. The response time of the home page dropped from 24 seconds to just over 3 seconds, and that of internal pages to 5-8 seconds.

2. Server resource consumption decreased.

3. The server became stable and stopped freezing.

4. Page depth (pages viewed per visit) increased by 30%. This improved SEO and, subsequently, sales: better behavioral metrics raise the site's search rankings, higher rankings bring more traffic, and more traffic brings more sales.

5. The client also received recommendations for speeding up the front end, for example:

- optimize images and serve them in WebP format;

- configure lazy loading;

- move all scripts that are not critical for rendering the page to the end of the page.

Conclusion

The site was sped up and its loading problems were fixed without changing the site's code. Site speed affects many metrics, from usability to search rankings, and these ultimately affect conversion.