Artificial Intelligence is arguably the hottest topic in technology right now. AI tools are being developed for every sector, including most areas of IT. In the DevOps and Cloud worlds specifically, it is worth looking beyond the hype and examining how these tools actually perform in practice.

Rather than simply comparing random popular AI tools, this article focuses on specific categories—such as monitoring, security improvement, and resource management—to provide a structured overview. The insights shared here are based on honest, practical experience using these tools in actual DevOps projects, reflecting both their potential and their current limitations. As always, the philosophy remains “concepts before tools,” so the examples provided illustrate broader engineering principles.

The Promise vs. The Reality of AI in DevOps

DevOps is fundamentally about automation and efficiency. Whether the goal is releasing a new feature quickly, securing infrastructure, or monitoring a platform to prevent issues, the objective is to automate these processes.

Imagine a scenario where AI takes over tedious, repetitive tasks, helps automate workflows, and assists engineers in making data-driven decisions. This vision includes super-fast code validations, proactive support for Cloud infrastructure or Kubernetes clusters, automatic detection of security threats, and rapid incident response—all driven by the power of AI.

If this sounds too good to be true, that is because, currently, it largely is. Most AI tools are not yet mature enough to be used on “autopilot.” They must be used like any other tool: they cannot do everything automatically, and many still require significant human review and validation. However, this does not render them useless. There are compelling use cases for AI tools right now.

General-purpose tools like ChatGPT are well known, but they have limitations when applied to the specific, complex integrations that DevOps requires. DevOps work means connecting many tools into automated processes: automating monitoring, notifying teams of abnormal behavior, detecting security issues, and provisioning infrastructure.

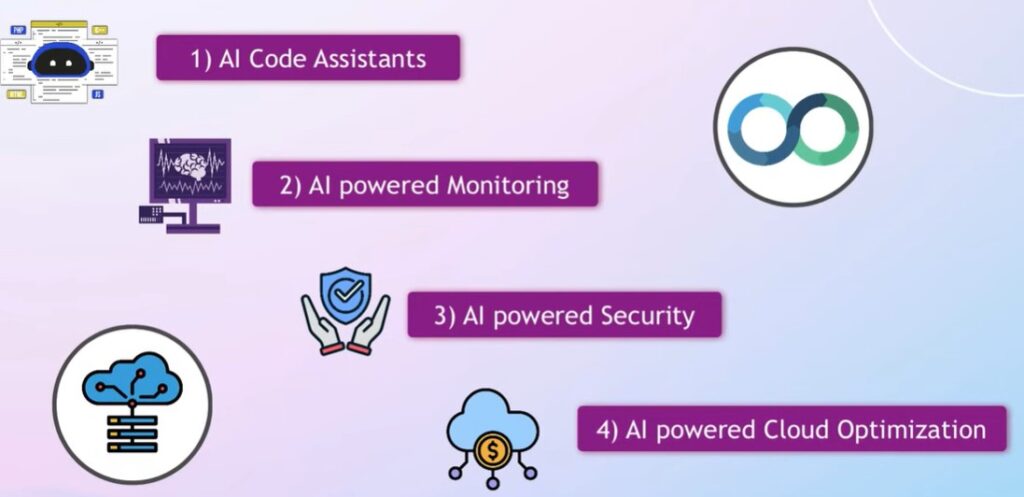

Here are the key categories where AI is making an impact in the DevOps and Cloud space.

1. AI Code Assistants

The primary use case for AI code assistants in this field is writing Infrastructure as Code (IaC), configuration files, and scripts.

A popular example is GitHub Copilot, alongside similar tools like Amazon Q. These tools function as assistants within your code editor or IDE, offering:

- Code Suggestions and Completions: Predicting what you want to code based on the current context.
- Natural Language to Code: You can type logic in plain English, and the tool generates the respective code block.
- Refactoring: Asking the assistant to clean up “dirty” code or find duplications.
- Code Explanation: This is a particularly interesting use case for learning. For example, a junior engineer trying to understand a complex Terraform codebase can use an AI assistant to explain the logic, effectively using the tool to upskill.

The Limitation

Based on practical experience in DevOps projects, the code generated by these assistants is usually not entirely usable out of the box. You still need to validate the output, and in many cases, fix it because the suggested code simply doesn’t work. Cross-checking with official documentation remains necessary.
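One way to make that validation step routine is to run an automated check over whatever the assistant produced before anyone merges it. The following is a minimal sketch, not a complete policy tool. It assumes the generated Terraform has already been planned and exported as JSON, and that the plan is shaped like the `resource_changes` list shown in the sample. The function name `find_open_ingress` and the sample data are hypothetical.

```python
def find_open_ingress(plan):
    """Return a finding for every security group rule open to the whole internet.

    `plan` is assumed to be a dict shaped like a Terraform JSON plan, where each
    entry in `resource_changes` has `address`, `type`, and `change.after`.
    """
    findings = []
    for resource in plan.get("resource_changes", []):
        if resource.get("type") != "aws_security_group":
            continue
        address = resource.get("address", "<unknown>")
        after = (resource.get("change") or {}).get("after") or {}
        for rule in after.get("ingress") or []:
            if "0.0.0.0/0" in (rule.get("cidr_blocks") or []):
                port = rule.get("from_port")
                findings.append(f"{address}: port {port} open to 0.0.0.0/0")
    return findings


# Hypothetical sample: an AI-generated security group that exposes SSH publicly.
sample_plan = {
    "resource_changes": [
        {
            "address": "aws_security_group.web",
            "type": "aws_security_group",
            "change": {
                "after": {
                    "ingress": [
                        {"from_port": 22, "to_port": 22, "cidr_blocks": ["0.0.0.0/0"]},
                        {"from_port": 443, "to_port": 443, "cidr_blocks": ["10.0.0.0/8"]},
                    ]
                }
            },
        }
    ]
}

for finding in find_open_ingress(sample_plan):
    print(finding)
```

A check like this does not replace human review or the official documentation. It only catches one well-known class of mistake early.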

IDE Integration vs. AI-Native Editors

Code assistants are most useful when integrated directly into the code editor (like Visual Studio Code or IntelliJ), which removes the need to switch between the editor and a browser. There is also a growing class of AI-powered editors like Cursor, where AI is built into the editor itself rather than added as a plugin. The advantage is that the editor has the whole project available as context, so its suggestions and answers can be more precise.

2. AI-Powered Monitoring

This category arguably offers more immediate value to DevOps than code generation. Monitoring in Cloud and DevOps is complex and must be automated. When a system comprises thousands of servers and tens of thousands of components, watching it manually is not feasible.

Automated monitoring and alerting are essential to proactively detect abnormal behavior. However, configuring these alerts is a challenging task in itself.

Deep Analysis and Predictive Analytics

Tools like Datadog’s Watchdog use machine learning to address this complexity. They act as a built-in intelligence layer that continuously analyzes billions of data points from infrastructure and applications.

- Root Cause Analysis: Once an issue is identified, troubleshooting can be time-consuming. By analyzing data on how services connect and correlate, AI can narrow down which component among thousands most likely caused the error, saving engineers significant manual effort.
- Predictive Analytics: By analyzing historical data and trends, these tools can flag potential issues before they occur, moving from reactive to proactive maintenance. This leverages the true strength of AI: analyzing massive datasets to find correlations that humans might miss.
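To make "detecting abnormal behavior from historical data" concrete, here is a deliberately simple sketch of the underlying idea: compare each new data point against a rolling baseline and flag large deviations. This is a plain rolling z-score, not the method any particular vendor uses, and the function name, window size, and threshold are illustrative assumptions.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return indexes of points that deviate strongly from the preceding window.

    A point is flagged when it lies more than `threshold` standard deviations
    away from the mean of the `window` points before it.
    """
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        baseline = mean(history)
        spread = stdev(history)
        if spread == 0:
            # Perfectly flat history: any change at all is unusual.
            if values[i] != baseline:
                anomalies.append(i)
            continue
        if abs(values[i] - baseline) / spread > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical request latencies in milliseconds, with one obvious spike.
latencies_ms = [100, 102, 99, 101, 100, 98, 103, 100, 400, 101]
print(find_anomalies(latencies_ms))  # [8]
```

The value of commercial tools lies in doing this across very large numbers of metrics at once, with seasonality and service dependencies taken into account, without anyone hand-tuning a threshold per metric.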

3. CI/CD Pipeline Optimization

CI/CD pipelines are the heart of DevOps automation. Tools in this space are increasingly using AI to focus on developer productivity and workflow optimization.

TeamCity Pipelines by JetBrains is an example of a tool focusing on this area through Self-Tuning Pipelines.

- Built-in Optimization: While you build and configure a pipeline, the platform provides intelligent suggestions on how to optimize it (for example, adding caching or running jobs in parallel to speed up execution). This removes the need to constantly switch back and forth to documentation.
- Configuration as Code: Engineers who prefer code over UI configuration are not left out. You can build the pipeline in the UI using these intelligent suggestions and then save it, which automatically generates the corresponding YAML in your Git repository. This bridges the gap between ease of use and the “Everything as Code” best practice.
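The reasoning behind a "run these jobs in parallel" suggestion can be illustrated with a small calculation: a pipeline that runs every job in sequence takes the sum of all job durations, while a parallelized one takes only as long as its longest dependency chain. The sketch below assumes an acyclic job graph and unlimited runners. The job names, durations, and function names are hypothetical and not taken from any specific CI tool.

```python
from functools import lru_cache


def sequential_duration(durations):
    """Total time when every job runs one after another."""
    return sum(durations.values())


def parallel_duration(durations, depends_on):
    """Time of the longest dependency chain, assuming unlimited parallel runners.

    `depends_on` maps a job to the jobs that must finish before it starts.
    The graph is assumed to contain no cycles.
    """

    @lru_cache(maxsize=None)
    def finish_time(job):
        upstream = depends_on.get(job, [])
        start = max((finish_time(dep) for dep in upstream), default=0)
        return start + durations[job]

    return max(finish_time(job) for job in durations)


# Hypothetical pipeline: three independent checks, then a deploy step.
durations_min = {"lint": 2, "unit_tests": 6, "build": 4, "deploy": 3}
depends_on = {"deploy": ["lint", "unit_tests", "build"]}

print(sequential_duration(durations_min))            # 15
print(parallel_duration(durations_min, depends_on))  # 9
```

In this example the three checks run side by side, so the pipeline is bounded by the slowest one (`unit_tests`) plus the deploy step.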

4. Security (DevSecOps)

Security is another critical area where AI can help prevent issues before they happen by discovering vulnerabilities based on statistical data or abnormal behavior. Some tools even allow for “auto-fixes” where the system detects and repairs a misconfiguration automatically.

Sysdig is a prominent tool in this category. Similar to monitoring tools, it uses machine learning for proactive analysis, but with a focus on security, particularly in containerized environments.

The Challenge of Scale

In modern DevOps, containers are the standard. An environment may run thousands of containers across multiple systems. Ensuring that every single container and service is secure, not misconfigured, and free of vulnerabilities is impossible to do manually.

How AI Helps

- Detection and Analysis: Tools like Sysdig automatically scan the environment to highlight potential threats.
- Attack Path Visualization: They can visualize the specific path an attacker would take from an entry point to sensitive data.
- Root Cause for Security: Just like with operational monitoring, identifying the source of a security breach is difficult. AI tools streamline the troubleshooting process by pointing to where the issue originates and suggesting how to fix it.
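The attack path idea can be sketched as a graph search: model resources as nodes, model "can reach" or "can assume" relationships as edges, and look for a route from a public entry point to sensitive data. The following is a minimal breadth-first search under those assumptions. It is not how any specific product implements the feature, and the resource names and the `shortest_attack_path` function are hypothetical.

```python
from collections import deque


def shortest_attack_path(graph, entry, target):
    """Return the shortest list of hops from `entry` to `target`, or None.

    `graph` maps each resource to the resources an attacker could reach from it.
    """
    queue = deque([[entry]])
    visited = {entry}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None


# Hypothetical environment: a public ingress leads to a pod whose node role
# has access to a customer database.
reachability = {
    "internet": ["ingress"],
    "ingress": ["web-pod"],
    "web-pod": ["node-role", "cache"],
    "node-role": ["customer-db"],
    "cache": [],
}

print(shortest_attack_path(reachability, "internet", "customer-db"))
# ['internet', 'ingress', 'web-pod', 'node-role', 'customer-db']
```

Seeing the full chain makes it clear which single link (here, the overly permissive node role) is worth fixing first.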

AI Workload Security

An emerging and important feature is security specifically for AI workloads. A significant percentage of generative AI workloads running in Kubernetes clusters has been observed to be publicly exposed. Vendors are now building features to monitor the security of the AI models themselves, with the aim of ensuring they do not leak sensitive data through external interactions.

5. Resource and Cost Management (FinOps)

Managing resources efficiently and saving costs is a major challenge, especially as applications scale and utilize multi-cloud environments.

AI tools such as CloudHealth, Usage AI, and Cast AI provide overviews of infrastructure efficiency and offer actionable recommendations.

- Optimization: They identify which instances are underutilized or not used at all.
- Right-Sizing: They recommend specific instance types or sizes to optimize performance and cost.
- Predictive Scaling: Using predictive analysis on usage patterns, these tools can forecast how much infrastructure will be needed in the future. This helps in scaling environments dynamically to avoid paying for unnecessary resources.
- Multi-Cloud Support: As many complex applications run on multi-cloud environments, having a unified view of workloads across different providers is crucial.
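The optimization and right-sizing recommendations above boil down to comparing observed utilization against provisioned capacity. Here is a rough sketch of that logic using CPU only. The thresholds, the power-of-two sizing, and all names (`InstanceUsage`, `recommend`) are illustrative assumptions. Real tools also weigh memory, network, pricing models, and longer observation windows.

```python
from dataclasses import dataclass


@dataclass
class InstanceUsage:
    name: str
    vcpus: int
    peak_cpu_percent: float  # highest CPU utilization seen in the observation window


def recommend(instance, idle_peak=5.0, target_peak=60.0):
    """Suggest an action so that peak CPU lands near `target_peak` percent."""
    if instance.peak_cpu_percent < idle_peak:
        return "terminate-candidate"
    # vCPUs required for the observed peak to sit at the target utilization.
    needed = instance.vcpus * instance.peak_cpu_percent / target_peak
    size = 1
    while size < needed:
        size *= 2  # assume instance sizes come in powers of two
    if size < instance.vcpus:
        return f"downsize to {size} vCPUs"
    return "keep"


# Hypothetical fleet.
fleet = [
    InstanceUsage("batch-1", vcpus=8, peak_cpu_percent=3.0),
    InstanceUsage("api-1", vcpus=16, peak_cpu_percent=20.0),
    InstanceUsage("db-1", vcpus=8, peak_cpu_percent=55.0),
]

for instance in fleet:
    print(instance.name, "->", recommend(instance))
# batch-1 -> terminate-candidate
# api-1 -> downsize to 8 vCPUs
# db-1 -> keep
```

Sizing against peak rather than average utilization is a conservative choice: it trades some savings for headroom during load spikes.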

Conclusion

These constitute the most significant current use cases for AI in DevOps and Cloud.

Despite the hype, AI tools are not yet mature enough to fulfill the ambitious promise where engineers focus solely on high-level logic while AI handles all technical heavy lifting. That reality has not yet arrived. These are currently just another set of tools that require technical expertise to wield effectively. The fundamental knowledge of DevOps concepts and technologies remains essential; AI cannot replace that expertise.

However, in use cases like automated monitoring, security, and resource management—where the sheer volume of data and components exceeds human processing capacity—AI tools can massively increase productivity. They save time on troubleshooting and data analysis, allowing engineers to focus on more complex, value-driven engineering tasks.