It is difficult to formulate universal security rules for a container infrastructure, because context comes first. Protection must take into account what kind of system it is, what tasks it solves, and in what environment it runs. Otherwise you can harden the containers as much as you like and still suffer from a kernel vulnerability or a human error in the system configuration.

Not long ago I came across a service whose team was very concerned about data security. All applications handling key data ran in containers and were tuned by experienced engineers. They had thought through container configuration, authorization monitoring, regular updates, IP blacklisting, and so on. But a zero-day vulnerability was discovered outside the applications, on the mail server. As a result, attackers could read any user’s email and used this information against the company. Despite the developers’ efforts, overall security turned out to be only as strong as the weakest link in the infrastructure.

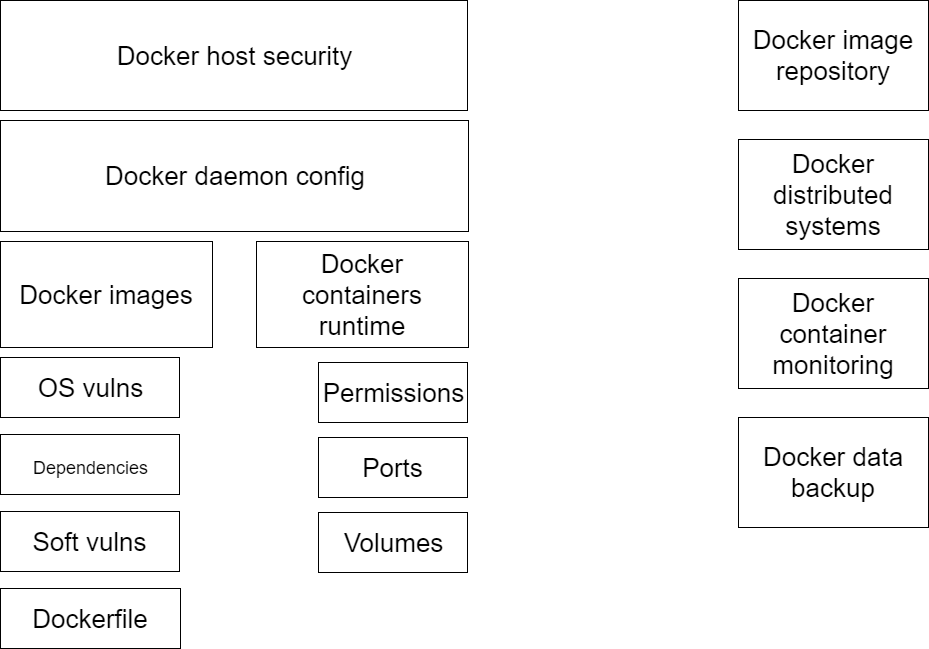

To make it easier to build protection from all sides, you need a tool for analyzing possible problems. A threat model for containerized systems is suitable here:

On the one hand, we consider the risks that relate to the containers and the host. On the other hand, we analyze the external components that affect the security of a containerized system. The outer layer includes the network and firewalls, the proactive defence system, all kinds of WAFs, the repository where Docker images are stored, and the distributed system.

Let’s see what problems arise at different levels of this diagram.

“Internal” vulnerabilities

Host vulnerabilities. Host security is a vast topic. Formally, “problems are not always on our side”. But threats at this level remain some of the most unpleasant.

Many believe that hosting the service in a provider’s cloud infrastructure will solve this problem. But the provider can only offer additional information security tools, such as firewalls that filter traffic to the host and scanners. The ultimate security of the system remains the client’s responsibility.

Here are some of the problems you may encounter on the host:

- Host infection due to lack of antivirus.

- Vulnerabilities in older versions of software that were not updated in time.

- Incorrect application settings on the host.

Regular checks with OpenSCAP or other SCAP-compatible vulnerability scanners will catch many of these problems.

Docker daemon configuration errors. I will highlight the Docker daemon configuration as a separate item. The default daemon configuration is often insecure and can create an additional attack vector, for example when a distributed system requires us to open access to the Docker socket over the network. By default, the socket has no protection and no authorization, and it can open access to absolutely the entire system.

There are also painfully simple mistakes: the network was protected with a firewall, but the firewall settings were not saved, and after a restart the system is compromised.

Docker image-level vulnerabilities. Problems at this level most often arise at the image build stage. What can happen:

- Risks of ICC, the inter-container communication of Docker containers. At the level of firewall settings, containers on the same bridge network are allowed to communicate. If ICC is enabled, then with access to one of the containers we can see its neighbors. So if communication between containers is not vital, it is worth prohibiting it.

- Vulnerabilities in the base image and in the system packages included in it. For example, libraries with vulnerabilities may end up inside the container.

- Vulnerabilities in third-party dependencies that are pulled from outside and used in applications.

More than once I have seen a container taken from somewhere on the network, added to the application, and exposed to the outside, and the very next day we found a pool of unnecessary processes running in it.

The container itself was legitimate, simply not updated. This is where various scanners come into play.

- Problems and vulnerabilities in the code of the applications themselves.

- Dockerfile vulnerabilities: incorrect and unsafe instructions that can be used when building an image. For example, we create a new user within the Dockerfile and then run content as this user.

- Vulnerabilities in the Docker Daemon itself, which can play a role when a container is compromised.

Runtime configuration problems. At runtime, seemingly non-critical vulnerabilities may appear, but in combination with other factors they turn into more serious threats. What can happen:

- Incorrect or excessive privileges granted to containers.

- Overly open network ports. Even if the ports are not published externally, they can become a risk when ICC is enabled and containers can communicate with each other.

- Insecure use of shared volumes. In particular, this includes the specific “docker-in-docker” mechanism that is needed during the build phase. In this situation, anyone who gains access to the repository can theoretically modify the build jobs and, thanks to the excessive privileges of the build agent, gain additional access rights.

I once observed this situation: there is a MongoDB database, the only port open from outside is the database port, and access requires authorization. But in the database itself, ports are open for admin panels and other systems. The problem is that the database admin area contains quite a few utilities, and in theory they can be used to gain full access to the system. If the container also runs as root, this becomes a tangible risk.

Influence of external components

Image repositories. Here, problems usually relate to the secure storage of images and their delivery from the repository to the target system. There is a risk that an image is substituted along the way.

Ideally, you should limit yourself to two or three trusted repositories. But this is not always convenient in the environments around production: external images are often used in test or staging. In this case, we use Docker Content Trust (DCT): we sign the images with a digital signature and configure the hosts to work only with correctly signed images.
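As a rough sketch of what this looks like with the stock Docker CLI: the DOCKER_CONTENT_TRUST environment variable makes pulls refuse unsigned tags, and docker trust sign publishes a signature for a tag. The function names here are made up for illustration, and the signing keys are assumed to be set up already.

```shell
# Pull an image only if its tag carries a valid signature.
# With DOCKER_CONTENT_TRUST=1 the Docker CLI refuses unsigned tags.
trusted_pull() {
    DOCKER_CONTENT_TRUST=1 docker pull "$1"
}

# Sign and push a tag; requires signing keys for the repository.
sign_image() {
    docker trust sign "$1"
}
```

For example, `sign_image registry.example.com/team/app:1.0` on the build side and `trusted_pull registry.example.com/team/app:1.0` on the host, where the registry and image names are placeholders.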

Distributed systems. Here the main risk is overly open access to the Docker socket (discussed above).

In the enterprise segment, I have come across quite a few instructions that aim to get software running quickly but omit important caveats about information security. For example, one of the steps requires opening access to Docker over TCP/IP. Meanwhile the firewall is left in its default configuration, and the need to protect the Docker socket is not mentioned in the instructions. In the 5 minutes that the TCP port is open, automated bots have time to scan it. At best, miners get installed; at worst, some other kind of malware. Even after we close the port and configure the firewall, the system remains compromised. Do not do it this way.
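One quick way to notice such an exposure is to look for listeners on the ports conventionally used by the Docker API: 2375 for plain TCP and 2376 for TLS. The filter below is a minimal sketch that assumes the output format of `ss -ltn`; the function name is illustrative, and a daemon bound to a non-standard port would not be caught.

```shell
# Filter `ss -ltn` output for listeners on the conventional Docker API
# ports: 2375 (plain TCP) and 2376 (TLS).
docker_tcp_listeners() {
    grep -E ':(2375|2376)[[:space:]]'
}
```

Usage: `ss -ltn | docker_tcp_listeners`. Any line printed deserves a closer look, especially one bound to 0.0.0.0 on port 2375.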

Monitoring a containerized system. A monitoring system helps us respond quickly when metrics behave abnormally. It is important to track the performance of both the containers and the system as a whole. In addition to monitoring, you can connect log analytics, for example to spot atypical requests.

Backing up data from a containerized system. In addition to the backup itself, the protection of the stored data is also important.

We had a client, a fairly popular service in a competitive field. It is not known whether this was a targeted attack, but in the end hackers gained access to the containerized system. The containers themselves had some protection. But data backups were stored on S3, and it was possible to obtain the key data from the containers and get full access. On top of that, the policies used for writing backups did not forbid deletion.

The rest was a matter of technique. First, the attackers replaced the data backups with garbage. Only after that did they destroy the system and begin to blackmail the owners: “Transfer bitcoins to us, and then, perhaps, we will return something to you.”
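One way to narrow this gap is to give the backup job credentials that can only upload. The sketch below prints a minimal IAM policy of that kind. The function name and the bucket name are placeholders, and a real setup will likely need more than this single statement.

```shell
# Print an IAM policy that lets the backup job upload objects only.
# No delete or read action is granted. Overwriting an existing key is
# still possible with s3:PutObject, so this should be paired with
# bucket versioning to keep earlier copies recoverable.
backup_writer_policy() {
    bucket="$1"
    cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject"],
      "Resource": "arn:aws:s3:::${bucket}/*"
    }
  ]
}
EOF
}
```

For example, `backup_writer_policy example-backups > backup-writer.json` produces a file that can be reviewed before it is attached to the backup user. With versioning enabled on the bucket, an upload of garbage over an existing backup creates a new version instead of destroying the old one.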

Final safety rules

1. Restrict access to the Docker daemon socket. The socket file must be owned by the root user. Keep in mind that any user with access to the socket effectively has access to the whole system, and this can only be prevented by prohibiting the mounting of files from certain directories.
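A small check along these lines can be run on a host. It is a sketch that assumes GNU stat (Linux) and the usual socket path /var/run/docker.sock; the function name is illustrative.

```shell
# Report owner and mode of the Docker socket and fail if users outside
# the owner and group can access it. Uses GNU stat (Linux).
check_docker_socket() {
    sock="${1:-/var/run/docker.sock}"
    owner=$(stat -c '%U' "$sock") || return 2
    mode=$(stat -c '%a' "$sock") || return 2
    echo "owner=$owner mode=$mode"
    [ "$owner" = "root" ] || echo "warning: $sock is not owned by root" >&2
    # The last octal digit holds the permissions for "other" users.
    case "$mode" in
        *0) return 0 ;;
        *)  echo "warning: $sock is accessible to all users" >&2; return 1 ;;
    esac
}
```

On a typical installation the socket is owned by root with group docker and mode 660, so it is also worth reviewing who is a member of that group.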

2. Do not pass the socket into the container (Docker-in-Docker). Instead of Docker-in-Docker, you can build with kaniko, a tool that builds images without using Docker. In this case, you can use a third-party system to sign images and connect it through the API.

3. Remember to configure authorization for Docker over TCP. Authorization is configured through TLS certificates: public and private key pairs signed within a certificate hierarchy. Kubernetes implements it the same way.
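For illustration, here is roughly how a TLS-protected daemon is addressed from a client. The flags are the documented Docker ones; the certificate file names follow the convention used in the Docker documentation, while the DOCKER_CERT_DIR and DOCKER_REMOTE variables and the function name are assumptions made for this sketch.

```shell
# Daemon side, for reference (flags documented for dockerd):
#   dockerd --tlsverify --tlscacert=ca.pem --tlscert=server-cert.pem \
#           --tlskey=server-key.pem -H=0.0.0.0:2376

# Client side: run any docker subcommand against the remote daemon,
# presenting a client certificate signed by the same CA.
# DOCKER_CERT_DIR and DOCKER_REMOTE are hypothetical variable names.
docker_tls() {
    docker --tlsverify \
        --tlscacert="${DOCKER_CERT_DIR}/ca.pem" \
        --tlscert="${DOCKER_CERT_DIR}/cert.pem" \
        --tlskey="${DOCKER_CERT_DIR}/key.pem" \
        -H "tcp://${DOCKER_REMOTE}:2376" "$@"
}
```

With the two variables set, `docker_tls version` should succeed only when both sides accept each other's certificates.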

4. Configure an unprivileged user. Always launch containers as an unprivileged user. With plain Docker, we simply specify the user’s UID when launching the container.

docker run -u 4000 alpine

During the image build, we create a user and switch to it.

FROM alpine
RUN addgroup -S myuser && adduser -S -G myuser myuser
USER myuser

We also enable user namespace support in the Docker daemon.

--userns-remap=default

Alternatively, you can set this in the Docker daemon’s JSON configuration file (daemon.json).
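To verify that an image really starts as an unprivileged user, its configured user can be read from the image metadata. This is a minimal sketch with an illustrative function name; it only looks at the image's default and cannot know about a `-u` override at launch.

```shell
# Succeeds (exit 0) when the image's configured user is root or unset,
# i.e. when the container would start as uid 0 by default.
image_runs_as_root() {
    user=$(docker image inspect --format '{{.Config.User}}' "$1") || return 2
    case "$user" in
        ''|root|root:*|0|0:*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, `image_runs_as_root alpine && echo "runs as root by default"`.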

5. Drop all kernel capabilities and add back only those you need. For example:

docker run --cap-drop all --cap-add CHOWN alpine

6. Disable privilege escalation (switching the user to UID 0).

--security-opt=no-new-privileges

7. Disable inter-container communication. By default, it is possible over the docker0 network. It is turned off with an option when starting the Docker daemon:

--icc=false
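The daemon-level settings from rules 4, 6 and 7 can also be kept together in daemon.json. The sketch below writes such a file to a path of your choice so it can be reviewed first; the function name and the default target path are illustrative, while the three keys are the documented daemon.json equivalents of the flags above.

```shell
# Write daemon-wide defaults for rules 4, 6 and 7 to a daemon.json file.
# The target defaults to ./daemon.json so the result can be reviewed
# before it is copied to /etc/docker/daemon.json and the daemon restarted.
write_daemon_json() {
    target="${1:-./daemon.json}"
    cat > "$target" <<'EOF'
{
  "userns-remap": "default",
  "no-new-privileges": true,
  "icc": false
}
EOF
}
```

Note that an option set both in daemon.json and on the dockerd command line prevents the daemon from starting, so each option should live in one place only.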

8. Limit resources. This is how we reduce the risk of unauthorized mining:

- -m or --memory: the memory available to the container before the OOM killer steps in;

- --cpus: how many CPUs are available, for example 1.5;

- --cpuset-cpus: which specific CPUs (cores) are available;

- --restart=on-failure:<number_of_restarts>: use this instead of the restart-always policy to limit the number of restarts and detect problems in time;

- --read-only: the file system is mounted read-only at startup, which is especially useful if the container serves static content.
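Put together, the per-container options from rules 4, 5, 6 and 8 might look like the wrapper below. It is a sketch only: the function name is made up, and every number is an example value, not a recommendation.

```shell
# Start a container with the restrictions from rules 4, 5, 6 and 8.
# All limits are example values, not recommendations.
hardened_run() {
    docker run \
        --user 4000 \
        --cap-drop all \
        --security-opt no-new-privileges \
        --memory 256m \
        --cpus 1.5 \
        --restart on-failure:3 \
        --read-only \
        "$@"
}
```

Usage: `hardened_run alpine true`. Extra flags can be passed before the image name, for example `--cap-add CHOWN` when a capability is genuinely needed, or `--tmpfs /tmp` when the application needs a writable scratch directory on a read-only file system.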

9. Do not disable security profiles. By default, Docker already uses profiles for Linux security modules. These rules may be tightened, but should not be loosened.

Separately, a few words about seccomp, the Linux kernel mechanism that lets you define which system calls are available. If an attacker is able to execute arbitrary code, seccomp will prevent them from using system calls that were not explicitly allowed. In its default profile, Docker blocks about 44 system calls out of 300+.

Besides seccomp, you can also use AppArmor or SELinux profiles.
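To find containers where these profiles were switched off, the security options recorded for each container can be inspected. The filter below is a sketch that assumes lines of the form "name [options]", as produced by the inspect format shown in the usage note; the function name is illustrative.

```shell
# Filter "name [security options]" lines for containers that were started
# with seccomp or AppArmor switched off.
find_unconfined() {
    grep -E '(seccomp|apparmor)[=:]unconfined'
}
```

Usage, assuming at least one container is running: `docker ps -q | xargs docker inspect --format '{{.Name}} {{.HostConfig.SecurityOpt}}' | find_unconfined`.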

10. Analyze the contents of the container. There are tools for detecting containers with known vulnerabilities: free Clair, shareware Snyk, Anchore, paid JFrog Xray and Qualys. Vulnerabilities can also be scanned for free in Harbor: it searches for known vulnerabilities and reports on the reliability of the container. You can also use general-purpose security policy systems, for example Open Policy Agent.