While we develop and run our application in Docker Desktop, everything is quite simple and clear. When it comes time to deploy it in the cloud, however, many more questions arise. Deploying even a simple application made of multiple containers has to be planned in advance. So today, using Amazon Web Services as an example, we will look at the options for running containerized applications in the cloud and (spoiler!) set up a Kubernetes cluster in AWS in two different ways.

This information is relevant both for those who already have an AWS subscription and for those who believe the restrictions on new user subscriptions will not last long.

Choosing an orchestration system

The most straightforward option is to rent Amazon Elastic Compute Cloud (EC2) virtual machines, install the OS and all the necessary software, and then manually move all our containers onto them. This can work well, especially if you have completed the Kubernetes The Hard Way course and want to apply everything you learned in a real project. Nobody can stop you from doing this. However, if this is your first project, I advise you to wait and take your first bumps in test environments or on non-critical applications. Moreover, by choosing this path we lose all the benefits of the cloud. It amounts to a kind of creative colocation: instead of buying a server and bringing it to a data center, you rent one.

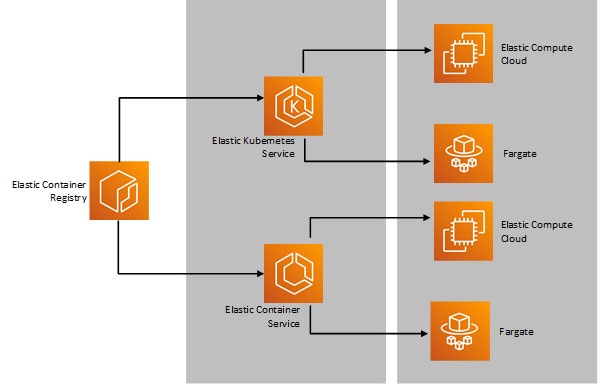

What we do next depends on which orchestration system we choose. In AWS, there are essentially two of them:

- ECS (Elastic Container Service) – Amazon’s own development;

- EKS (Elastic Kubernetes Service) – Kubernetes adapted for AWS.

Of course, there are many more orchestration systems out there: Swarm, Mesos, Nomad, Rancher, “Your_favorite_system”. In AWS, though, setting aside plain EC2, we are really choosing between ECS and EKS. OpenShift stands apart, but that is a story for a separate article.

You may also see ECR (Elastic Container Registry) mentioned. It is just a container image repository, similar to Docker Hub, Harbor, Nexus, etc. It does not affect the choice of orchestrator, but keep in mind that ECR is part of the AWS ecosystem. As a result, integrating it with EKS will be somewhat easier and possibly cheaper.
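Because ECR lives inside AWS, a private image reference follows a fixed pattern that includes your account ID and region. The sketch below shows how such a URI is assembled for a standard (non-China) region. The account ID, region, repository and tag are hypothetical placeholders.

```python
def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Build a private ECR image URI: <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>."""
    # AWS account IDs are always 12 digits.
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account IDs are 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    print(ecr_image_uri("123456789012", "eu-central-1", "my-app", "v1.0.0"))
```

This is the string you would put in the `image` field of an ECS task definition or a Kubernetes pod spec.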

Why are we talking about an orchestration system at all? Because once you have more than one container, you need a tool to manage their launch, scaling and other day-to-day tasks.

To avoid making the wrong choice, look at the diagram:

Choosing hosting

After you have decided on the orchestration system, you need to choose the type of hosting. There are two options:

- EC2;

- Fargate.

If you use ECS with EC2, AWS will start the required number of EC2 virtual machines and launch the necessary services on them:

- a container runtime;

- the ECS agent (to communicate with the control plane);

- and, finally, your containers.

The control plane is AWS's responsibility, but managing the EC2 virtual machines falls on your shoulders. You will see them in the console and can connect to them like any other virtual machine. You can also add or remove EC2 machines as needed.
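In ECS, the difference between the two hosting types shows up in the task definition. The sketch below builds the keyword arguments for the ECS `RegisterTaskDefinition` call (`register_task_definition` in boto3) for either launch type. It relies on two rules: Fargate requires `awsvpc` networking and task-level CPU and memory, while on EC2 the memory is reserved per container on your own instances. The family name, container name, image and sizes are hypothetical placeholders. The actual AWS call is left commented out, so the block runs without boto3 or credentials.

```python
import json


def build_task_definition(launch_type: str, image: str) -> dict:
    """Return keyword arguments for ECS register_task_definition (placeholder names and sizes)."""
    container = {
        "name": "web",                  # hypothetical container name
        "image": image,
        "essential": True,
        "portMappings": [{"containerPort": 80}],
    }
    kwargs = {"family": "demo-web", "containerDefinitions": [container]}  # hypothetical family
    if launch_type == "EC2":
        # On EC2 you reserve memory per container; the ECS agent on your own
        # instances runs the container where that memory is available.
        container["memory"] = 512
        kwargs["requiresCompatibilities"] = ["EC2"]
        kwargs["networkMode"] = "bridge"
    elif launch_type == "FARGATE":
        # Fargate requires awsvpc networking and task-level CPU/memory (as strings),
        # which AWS uses to size the capacity it provisions for the task.
        kwargs["requiresCompatibilities"] = ["FARGATE"]
        kwargs["networkMode"] = "awsvpc"
        kwargs["cpu"] = "256"
        kwargs["memory"] = "512"
    else:
        raise ValueError(f"unsupported launch type: {launch_type}")
    return kwargs


if __name__ == "__main__":
    request = build_task_definition("FARGATE", "nginx:latest")
    print(json.dumps(request, indent=2))
    # To register it for real (requires boto3 and AWS credentials):
    # import boto3
    # boto3.client("ecs").register_task_definition(**request)
```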

If we want AWS to manage not only the control plane but also the virtual machines on which the containers run, we can use the Fargate service. In this case, the AWS console will not show specific EC2 machines, only quantitative indicators of the resources in use. Fargate analyzes the parameters of your container, such as how much memory, how many CPU cores and how much disk space it needs. It then provisions server capacity for your specific request.
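Fargate does not provision arbitrary sizes. It works with a fixed set of CPU and memory combinations, so a request is effectively rounded up to the nearest one. The sketch below models that rounding. The table is a simplified subset of the published Fargate combinations up to 4 vCPU, written from memory. Check it against the current AWS documentation before relying on it.

```python
from typing import Tuple

# Task-level CPU (in CPU units, 1024 = 1 vCPU) -> allowed memory sizes in MiB.
# Simplified subset of Fargate combinations; verify against current AWS docs.
FARGATE_SIZES = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def fit_fargate_size(cpu_units: int, memory_mib: int) -> Tuple[int, int]:
    """Round a container's request up to the smallest listed Fargate size."""
    for cpu in sorted(FARGATE_SIZES):
        if cpu < cpu_units:
            continue  # not enough CPU at this tier
        for mem in FARGATE_SIZES[cpu]:
            if mem >= memory_mib:
                return cpu, mem
    raise ValueError("request exceeds the sizes in this table")


if __name__ == "__main__":
    print(fit_fargate_size(300, 700))   # (512, 1024)
    print(fit_fargate_size(256, 3000))  # (512, 3072): 0.25 vCPU tops out at 2 GB
```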

At the same time, you have no access to the servers themselves. This is convenient if you lack the ability or desire to spend time and effort managing virtual servers. In addition, you pay only for the resources you use, and you can easily scale the solution when needed. With EC2, by contrast, you pay for the entire virtual machine.
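The pricing difference comes down to simple arithmetic. Fargate charges for the vCPU and memory a task reserves, for as long as it runs. EC2 charges for whole instances for as long as they exist. The sketch below compares the two. Every price in it is a made-up placeholder rather than a real AWS rate, and `HOURS_IN_MONTH` uses the common 730-hour approximation.

```python
HOURS_IN_MONTH = 730  # common approximation of hours in a month


def fargate_cost(vcpu: float, memory_gb: float, hours_running: float,
                 price_per_vcpu_hour: float, price_per_gb_hour: float) -> float:
    """Fargate-style billing: pay for reserved vCPU and memory only while the task runs."""
    return hours_running * (vcpu * price_per_vcpu_hour + memory_gb * price_per_gb_hour)


def ec2_cost(instances: int, hours_provisioned: float, price_per_instance_hour: float) -> float:
    """EC2-style billing: pay for whole VMs for as long as they exist, however busy they are."""
    return instances * hours_provisioned * price_per_instance_hour


if __name__ == "__main__":
    # All prices below are placeholders, not real AWS rates.
    # A 0.5 vCPU / 1 GB task that runs only 200 hours a month...
    fargate = fargate_cost(0.5, 1.0, 200, price_per_vcpu_hour=0.04, price_per_gb_hour=0.005)
    # ...versus one small VM kept running all month.
    ec2 = ec2_cost(1, HOURS_IN_MONTH, price_per_instance_hour=0.02)
    print(f"Fargate: ${fargate:.2f}, EC2: ${ec2:.2f}")
```

With these placeholder numbers, the same task running all 730 hours would cost more on Fargate than the VM. The better choice therefore depends on how busy your workload is.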

ECS integrates quite well with other AWS services such as IAM, load balancing and CloudWatch. However, for better or worse, Kubernetes is now the de facto orchestration standard, which is why AWS offers its own implementation of it, called EKS.

Choosing between EKS and ECS

Both EKS and ECS manage the control plane for you, but there are a few important differences. When choosing between them, consider the following:

ECS:

- easy to set up and suitable for simpler applications or the very beginning of working with containers;

- likely to be cheaper;

- works only in AWS, which means that migrating to ECS from another platform will be problematic;

- if you don’t like something, migrating away from it will be just as difficult.

EKS:

- uses the same API as any other K8s, and if you already have experience with it, then you do not have to learn everything from scratch;

- open source! (We still love open source, don’t we?);

- it will be relatively easy to migrate from similar offerings in Google Cloud, Azure or DigitalOcean, because they run the same Kubernetes;

- the solution's wide adoption is also important: if something goes wrong, there is someone to ask.
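The criteria above can be condensed into a rough rule of thumb. The sketch below is a hypothetical scoring helper, not an official AWS decision procedure. The `Requirements` fields simply mirror the list, and ties go to ECS because it is the easier one to set up.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical project traits mirroring the ECS/EKS criteria."""
    team_knows_kubernetes: bool   # existing K8s experience carries over to EKS
    may_leave_aws: bool           # portability to other clouds matters
    simple_app: bool              # small app or first steps with containers
    budget_sensitive: bool        # ECS is likely to be cheaper


def suggest_orchestrator(req: Requirements) -> str:
    """Score each option by the criteria it satisfies; ties go to the simpler ECS."""
    eks_score = int(req.team_knows_kubernetes) + int(req.may_leave_aws)
    ecs_score = int(req.simple_app) + int(req.budget_sensitive)
    return "EKS" if eks_score > ecs_score else "ECS"


if __name__ == "__main__":
    print(suggest_orchestrator(Requirements(True, True, False, False)))
    print(suggest_orchestrator(Requirements(False, False, True, True)))
```

In practice you would weigh these factors rather than count them. The point is only that portability and existing Kubernetes skills push toward EKS, while simplicity and cost push toward ECS.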

The differences are clear, but what exactly makes EKS better than running Kubernetes yourself on bare metal?

Firstly, EKS deploys the control plane for you, with all the services that normally run on the Master Node. Specialists responsible for migration can rejoice: Kubernetes The Hard Way can be postponed until better times.

Secondly, each component of a Master Node created this way is replicated across availability zones in your selected region. This gives you high availability and fault tolerance out of the box. It is extremely convenient, because making Kubernetes fault tolerant is not as simple as it might seem at first glance: each control plane service requires its own approach.
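AWS runs the replicated control plane itself. When you create a cluster, though, EKS asks for subnets in at least two different availability zones. The sketch below builds the keyword arguments for boto3's `eks.create_cluster` call (`name`, `roleArn`, `resourcesVpcConfig`) and checks the zone requirement up front. The cluster name, role ARN and subnet IDs are hypothetical placeholders. The real call is left commented out.

```python
from typing import Dict


def build_cluster_request(name: str, role_arn: str, subnets: Dict[str, str]) -> dict:
    """Return kwargs for boto3 eks.create_cluster.

    `subnets` maps subnet ID -> availability zone (placeholder values in the example).
    """
    zones = set(subnets.values())
    if len(zones) < 2:
        raise ValueError("EKS needs subnets in at least two availability zones")
    return {
        "name": name,
        "roleArn": role_arn,
        "resourcesVpcConfig": {"subnetIds": sorted(subnets)},
    }


if __name__ == "__main__":
    request = build_cluster_request(
        "demo-cluster",
        "arn:aws:iam::123456789012:role/demo-eks-cluster-role",  # hypothetical role
        {"subnet-aaaa": "eu-central-1a", "subnet-bbbb": "eu-central-1b"},
    )
    print(request)
    # With boto3 installed and credentials configured:
    # import boto3
    # boto3.client("eks").create_cluster(**request)
```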

Providing fault tolerance

So, how do we provide fault tolerance? First, you need to connect Worker Nodes, where your containers will actually run, to the Master Node. At this stage, several options appear again. Worker Nodes are regular EC2 servers that you connect to the Master Node, just like in any other Kubernetes cluster.

The first option is to use regular EC2 virtual machines, which we will install, update and manage ourselves.

The second is a so-called node group (Nodegroup). It creates and deletes EC2 instances for you as needed and installs the components required for Kubernetes on them, but you still have to configure them.
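A managed node group is created with boto3's `eks.create_nodegroup` call. Its `scalingConfig` sets the minimum, desired and maximum number of instances the group keeps. Scaling on actual load within those bounds typically needs an extra component, such as the Kubernetes Cluster Autoscaler. The sketch below only builds the request. The naming scheme, instance type, role ARN and subnet IDs are hypothetical placeholders.

```python
from typing import List


def build_nodegroup_request(cluster: str, node_role_arn: str, subnet_ids: List[str],
                            min_size: int, desired: int, max_size: int) -> dict:
    """Return kwargs for boto3 eks.create_nodegroup (a managed node group)."""
    if not (min_size <= desired <= max_size):
        raise ValueError("need min_size <= desired <= max_size")
    return {
        "clusterName": cluster,
        "nodegroupName": f"{cluster}-workers",   # hypothetical naming scheme
        "nodeRole": node_role_arn,               # IAM role assumed by the EC2 workers
        "subnets": subnet_ids,
        "instanceTypes": ["t3.medium"],          # placeholder instance type
        "scalingConfig": {"minSize": min_size, "desiredSize": desired, "maxSize": max_size},
    }


if __name__ == "__main__":
    request = build_nodegroup_request(
        "demo-cluster",
        "arn:aws:iam::123456789012:role/demo-eks-node-role",  # hypothetical role
        ["subnet-aaaa", "subnet-bbbb"],
        min_size=1, desired=2, max_size=4,
    )
    print(request)
    # import boto3
    # boto3.client("eks").create_nodegroup(**request)
```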

The third option is to use Fargate, similar to what was suggested in ECS. In this case, AWS will take care of resource allocation.
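In EKS, Fargate is enabled through a Fargate profile, created with boto3's `eks.create_fargate_profile` call. Its selectors (a namespace plus optional labels) decide which pods AWS runs on Fargate. Pods on Fargate run only in private subnets. The sketch below builds such a request. The profile naming scheme, role ARN, subnet IDs and namespace are hypothetical placeholders.

```python
from typing import Dict, List, Optional


def build_fargate_profile_request(cluster: str, pod_role_arn: str,
                                  private_subnet_ids: List[str], namespace: str,
                                  labels: Optional[Dict[str, str]] = None) -> dict:
    """Return kwargs for boto3 eks.create_fargate_profile."""
    selector: dict = {"namespace": namespace}
    if labels:
        selector["labels"] = labels  # pods must carry all of these labels to match
    return {
        "fargateProfileName": f"{cluster}-{namespace}",  # hypothetical naming scheme
        "clusterName": cluster,
        "podExecutionRoleArn": pod_role_arn,
        "subnets": private_subnet_ids,  # Fargate pods run only in private subnets
        "selectors": [selector],
    }


if __name__ == "__main__":
    request = build_fargate_profile_request(
        "demo-cluster",
        "arn:aws:iam::123456789012:role/demo-fargate-pod-role",  # hypothetical role
        ["subnet-private-a", "subnet-private-b"],
        namespace="batch",
    )
    print(request)
    # import boto3
    # boto3.client("eks").create_fargate_profile(**request)
```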

Keep in mind that with EKS you do not have to commit to a single option: some containers can run on EC2 and others on Fargate.
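How a pod ends up on one side or the other is decided by the Fargate profile selectors. A pod goes to Fargate if its namespace equals a selector's namespace and it carries every label listed in that selector. Otherwise, it is scheduled onto the EC2 worker nodes. The sketch below models only exact matching. It ignores details such as multiple matching profiles. The namespaces and labels are hypothetical.

```python
from typing import Dict, List


def matches_selector(pod_namespace: str, pod_labels: Dict[str, str], selector: dict) -> bool:
    """Match when the namespace is equal and every selector label is present with the same value."""
    if pod_namespace != selector["namespace"]:
        return False
    wanted = selector.get("labels", {})
    return all(pod_labels.get(key) == value for key, value in wanted.items())


def placement(pod_namespace: str, pod_labels: Dict[str, str], selectors: List[dict]) -> str:
    """Return where the pod would run under this simplified model."""
    if any(matches_selector(pod_namespace, pod_labels, s) for s in selectors):
        return "Fargate"
    return "EC2 nodes"


if __name__ == "__main__":
    selectors = [
        {"namespace": "batch"},                                 # every pod in "batch"
        {"namespace": "web", "labels": {"compute": "fargate"}},  # only labelled "web" pods
    ]
    print(placement("batch", {}, selectors))
    print(placement("web", {"app": "frontend"}, selectors))
    print(placement("web", {"app": "frontend", "compute": "fargate"}, selectors))
```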

Which deployment option to use is up to the system architect. As we have seen, each has its own advantages and disadvantages. In the next article, we will look at how to deploy a simple Kubernetes cluster based on EKS.