Containers are isolated black boxes. As long as they work, it is easy to forget which programs, and which versions of them, are running inside. A container can do its job perfectly from an operational point of view while running vulnerable software. These vulnerabilities may have been fixed upstream long ago, yet remain in your local image. Without appropriate measures, problems of this kind can go unnoticed for a long time.

Best practices

Treating containers as immutable, atomic parts of the system is reasonable from an architectural point of view; however, to keep them secure, their contents should be checked regularly:

To get the latest vulnerability fixes, regularly update and rebuild your images. Of course, do not forget to test them before deploying to production.

Avoid patching running containers; rebuild the image with each update instead. Docker has a declarative, efficient, and easy-to-understand build system, so this is simpler than it may seem at first glance.
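A scheduled job can flag images that have not been rebuilt for a while. The sketch below assumes you pass it the creation timestamp printed by docker image inspect --format '{{.Created}}' <image>; the 30-day limit is an arbitrary example policy, not a recommendation from this article, and the image name in the usage comment is hypothetical.

```python
import re
from datetime import datetime, timedelta, timezone

# RFC 3339 timestamp as printed by `docker image inspect --format '{{.Created}}'`.
_TIMESTAMP = re.compile(
    r"(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})(?:\.(\d+))?(Z|[+-]\d{2}:\d{2})?"
)


def parse_created(value):
    """Parse an image creation timestamp into a timezone-aware datetime."""
    match = _TIMESTAMP.fullmatch(value.strip())
    if match is None:
        raise ValueError(f"unrecognised timestamp: {value!r}")
    base, digits, offset = match.groups()
    # Docker prints up to nanoseconds; datetime stores microseconds, so keep 6 digits.
    micro = (digits or "0")[:6].ljust(6, "0")
    # Assumption: a timestamp without an offset is treated as UTC.
    offset = "+00:00" if offset in (None, "Z") else offset
    return datetime.fromisoformat(f"{base}.{micro}{offset}")


def is_stale(created, max_age_days=30, now=None):
    """Return True if the image is older than max_age_days (an example policy)."""
    now = now or datetime.now(timezone.utc)
    return now - parse_created(created) > timedelta(days=max_age_days)


if __name__ == "__main__":
    import sys
    # Usage: python image_age.py "$(docker image inspect --format '{{.Created}}' myimage)"
    print("rebuild needed" if is_stale(sys.argv[1]) else "image is recent enough")
```

Comparing against the creation date rather than the base image's patch level is a deliberate simplification: it only tells you when a rebuild is overdue, not whether the rebuild will actually pick up fixes.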

Use software that regularly receives security updates. Anything you install manually, bypassing your distribution's repositories, you will have to keep updated yourself.

Gradual rolling updates that do not interrupt the service are a fundamental property of systems built with Docker and microservices.

Keep user data separate from container images; this makes the update process safer.

Keep it simple. Simple systems need fewer updates: the fewer components in the system, the smaller the attack surface and the easier the updates. Split containers up if they become too complex.

Use vulnerability scanners; there are plenty of them, both free and commercial. Stay informed about security events related to the software you use: subscribe to mailing lists, notification services, and so on.

Make security scanning a mandatory step in your CI/CD pipeline and automate wherever possible; do not rely on manual checks alone.
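One way to make scanning mandatory is a gate script that fails the pipeline when a report contains serious findings. This is a minimal sketch under assumptions: the report is taken to be a JSON list of objects with id, package and severity fields, and the severity scale is an invented example. Real scanners such as Clair use their own output formats and labels, so the parsing would need adapting.

```python
import json
import sys

# Assumed severity scale; each real scanner defines its own labels.
SEVERITY_ORDER = {"UNKNOWN": 0, "LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def blocking_findings(report, threshold="HIGH"):
    """Return the findings whose severity is at or above the threshold."""
    limit = SEVERITY_ORDER[threshold.upper()]
    return [
        finding for finding in report
        if SEVERITY_ORDER.get(finding.get("severity", "UNKNOWN").upper(), 0) >= limit
    ]


def main(argv):
    if len(argv) not in (2, 3):
        print("usage: scan_gate.py REPORT.json [THRESHOLD]", file=sys.stderr)
        return 2
    threshold = argv[2] if len(argv) == 3 else "HIGH"
    with open(argv[1], encoding="utf-8") as fh:
        report = json.load(fh)
    blocking = blocking_findings(report, threshold)
    for finding in blocking:
        print(f"{finding['severity']}: {finding['id']} in {finding.get('package', '?')}",
              file=sys.stderr)
    # A non-zero exit status fails the CI job, so the image is not promoted.
    return 1 if blocking else 0


if __name__ == "__main__":
    sys.exit(main(sys.argv))
```

Exiting with a non-zero status is the simplest contract most CI systems understand, which is why the gate reports through its exit code rather than through a custom API.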

Examples

Many Docker image registries offer an image scanning service. CoreOS Quay, for example, uses Clair, an open-source security scanner for Docker images. Quay is a commercial platform, but some of its services are free. You can create a trial account by following these instructions.

After registering an account, open Account Settings and set a new password (you will need it to create repositories).

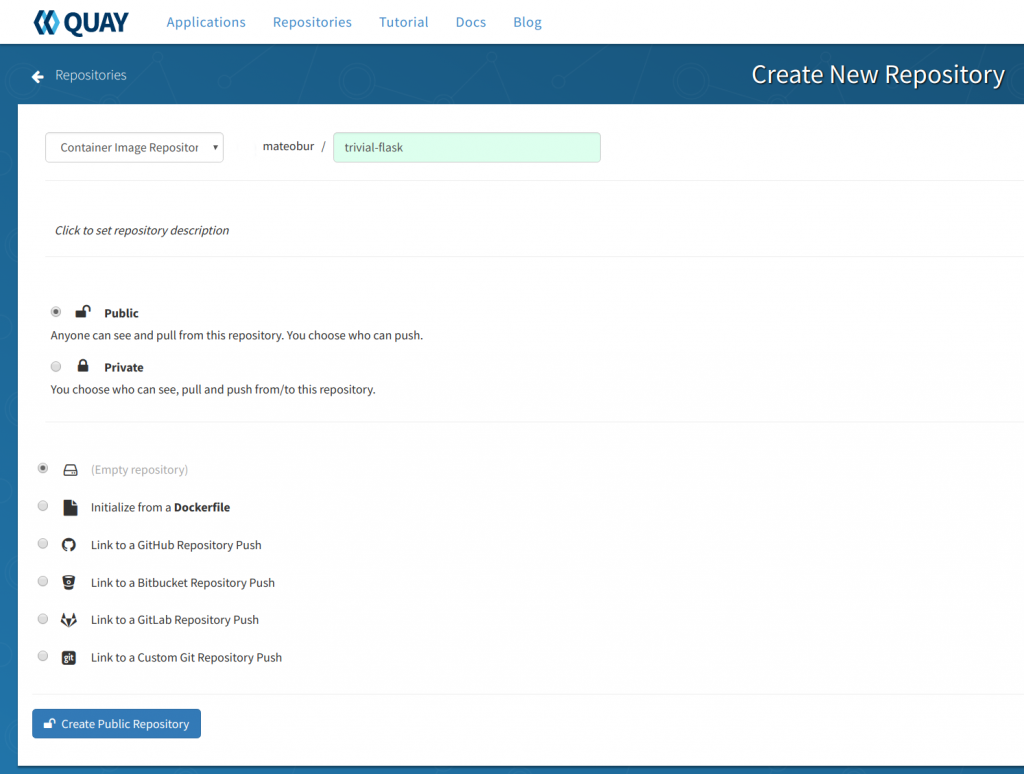

Click + in the upper right corner and create a new public repository:

Here we will create an empty repository, but as you can see in the screenshot, there are other options too.

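Now log in to Quay from the console, tag the local image with your repository name, and push it:

    # docker login quay.io
    # docker tag <local_image>:<tag> quay.io/<your_quay_user>/<your_quay_image>:<tag>
    # docker push quay.io/<your_quay_user>/<your_quay_image>:<tag>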
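If the upload is scripted, for example in a CI job, the commands can be built programmatically. A local image has to carry the registry name before docker push will send it to Quay, so the sketch includes a docker tag step. It only builds and prints the commands; the regular expressions approximate Docker's image reference rules (lowercase path components, tags of at most 128 characters not starting with a dot or dash), and the names alice and myapp are hypothetical.

```python
import re
import shlex

# Approximation of Docker's reference grammar for path components and tags.
COMPONENT = re.compile(r"[a-z0-9]+(?:(?:[._]|__|-+)[a-z0-9]+)*")
TAG = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")


def quay_reference(user, image, tag):
    """Compose quay.io/<user>/<image>:<tag>, rejecting obviously invalid parts."""
    for part in (user, image):
        if not COMPONENT.fullmatch(part):
            raise ValueError(f"invalid repository component: {part!r}")
    if not TAG.fullmatch(tag):
        raise ValueError(f"invalid tag: {tag!r}")
    return f"quay.io/{user}/{image}:{tag}"


def push_commands(local_image, user, image, tag):
    """Build (but do not run) the login, tag and push commands as argv lists."""
    remote = quay_reference(user, image, tag)
    return [
        ["docker", "login", "quay.io"],
        ["docker", "tag", local_image, remote],
        ["docker", "push", remote],
    ]


if __name__ == "__main__":
    for argv in push_commands("myapp:1.0", "alice", "myapp", "1.0"):
        print(shlex.join(argv))
```

Keeping each command as an argument list means it can later be passed to subprocess.run without going through a shell.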

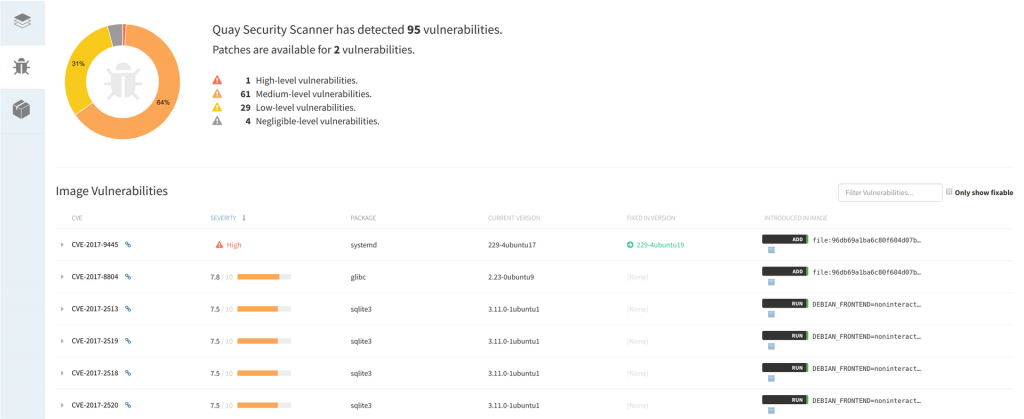

Once the image has been uploaded, you can click its ID to see the results of the security scan: vulnerabilities sorted by descending severity, with their CVE identifiers and the package versions that contain fixes.
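The same ordering is useful when triaging scan results outside the web UI. This sketch sorts findings by descending severity and, within one severity, lists the ones that already have a fixed package version first. The severity labels follow the scale Clair has used (Unknown through Critical), but treat the exact set, the Finding record and the placeholder CVE IDs as assumptions.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed severity ranking; higher means more severe.
SEVERITY_RANK = {"Unknown": 0, "Negligible": 1, "Low": 2, "Medium": 3, "High": 4, "Critical": 5}


@dataclass
class Finding:
    cve: str
    package: str
    installed: str
    severity: str
    fixed_in: Optional[str] = None  # None when no fixed version is published yet


def triage(findings):
    """Most severe first; within a severity, fixable findings before unfixable ones."""
    return sorted(
        findings,
        key=lambda f: (-SEVERITY_RANK.get(f.severity, 0), f.fixed_in is None, f.cve),
    )


def report(findings):
    """Render one line per finding, telling the reader which upgrade fixes it."""
    lines = []
    for f in triage(findings):
        fix = f"upgrade to {f.fixed_in}" if f.fixed_in else "no fix yet"
        lines.append(f"[{f.severity}] {f.cve} {f.package} {f.installed}: {fix}")
    return lines
```

Putting fixable findings first within each severity reflects the advice above: those are the ones a rebuild with updated packages can remove right away.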

Docker credentials and secrets

Most programs need confidential data to operate: user password hashes, certificates, encryption keys, and so on. The nature of containers makes this harder: you do not just deploy a server, you set up an environment in which microservices are constantly created and destroyed. This calls for an automated, reliable, and secure process for handling confidential information.

Best practices

Do not use environment variables to store secrets. This is a common but unsafe practice.

Do not keep secrets in container images. Read this report about finding and fixing a vulnerability in one of IBM's services: "The private key and certificate were mistakenly left inside the container image."
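A crude check for this mistake is to search an image's filesystem for PEM private key headers. The sketch assumes the filesystem has already been unpacked into a directory, for example with docker create followed by docker export <container> | tar -x -C <dir>. Note the limitation: an export shows only the final filesystem, so a key added in one layer and deleted in a later one is not found here, although it still ships inside the image.

```python
import os
import re

# PEM headers such as "-----BEGIN PRIVATE KEY-----" or "-----BEGIN OPENSSH PRIVATE KEY-----".
PRIVATE_KEY_MARKER = re.compile(rb"-----BEGIN (?:[A-Z0-9]+ )*PRIVATE KEY-----")


def contains_private_key(data):
    """Return True if the bytes contain a PEM private key header."""
    return PRIVATE_KEY_MARKER.search(data) is not None


def find_private_keys(root, max_bytes=1_000_000):
    """Walk an unpacked image filesystem and return relative paths holding private keys."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path) or not os.path.isfile(path):
                continue  # skip symlinks, device nodes, sockets, etc.
            try:
                with open(path, "rb") as fh:
                    chunk = fh.read(max_bytes)  # only the first max_bytes are inspected
            except OSError:
                continue  # unreadable file: skip it rather than abort the scan
            if contains_private_key(chunk):
                hits.append(os.path.relpath(path, root))
    return sorted(hits)
```

Dedicated secret scanners cover many more patterns (API tokens, cloud credentials), so this is a starting point rather than a replacement for them.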

If your system is fairly sophisticated, deploy Docker credential management software. Build your own secret storage (loading secrets with curl, mounting volumes, and so on) only if you know very well what you are doing.

Examples

First, let's see how easily environment variables can be intercepted:

    # docker run -it -e password='S3cr3tp4ssw0rd' alpine sh
    / # env | grep pass
    password=S3cr3tp4ssw0rd

This is trivial, and it still works after switching to a regular user with su:

    / # su user
    / $ env | grep pass
    password=S3cr3tp4ssw0rd
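The underlying reason is that a process's environment is copied into every child it starts. This small Python sketch shows that inheritance outside Docker: it sets a variable (comparable to what docker run -e does for the container's main process) and starts a child that can read it without being given anything explicitly. The variable name used in the test is hypothetical. Docker also displays such variables in docker inspect output, under Config.Env.

```python
import os
import subprocess
import sys


def leaked_to_child(name, value):
    """Set a variable in this process and return what a newly started child reads."""
    os.environ[name] = value  # comparable to `docker run -e name=value`
    try:
        child = subprocess.run(
            [sys.executable, "-c", f"import os; print(os.environ.get({name!r}, ''))"],
            capture_output=True,
            text=True,
            check=True,
        )  # no env= argument: the child inherits the parent's environment
    finally:
        del os.environ[name]  # clean up the parent's environment again
    return child.stdout.strip()


if __name__ == "__main__":
    print(leaked_to_child("password", "S3cr3tp4ssw0rd"))
```

Every helper process, crash reporter or debugging shell started inside the container therefore receives the secret as well, which is what makes environment variables a poor place for it.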

Container orchestration systems now include tools for managing secrets. For example, Kubernetes has Secret objects, and Docker Swarm has its own secrets functionality, which we will demonstrate now:
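For comparison, a Kubernetes Secret is an ordinary API object whose data values are base64-encoded. The sketch builds such a manifest as JSON (kubectl accepts JSON as well as YAML); the secret name db-credentials is hypothetical. Keep in mind that base64 is an encoding, not encryption, so the generated manifest must be protected just like the secret itself.

```python
import base64
import json


def k8s_secret_manifest(name, values):
    """Build a Kubernetes Secret manifest; values under `data` must be base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


if __name__ == "__main__":
    manifest = k8s_secret_manifest("db-credentials", {"password": "S3cr3tp4ssw0rd"})
    print(json.dumps(manifest, indent=2))  # e.g. redirect to a file for `kubectl apply -f`
```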

Initialize a new Docker Swarm (you may want to do this in a virtual machine):

    # docker swarm init --advertise-addr <your_advertise_addr>

Create a file containing any text; it will be your secret:

    # cat secret.txt
    This is my secret

Based on this file, create a new secret resource:

    # docker secret create somesecret secret.txt

Create a Docker Swarm service with access to this secret (you can change uid, gid, mode, etc.):

    # docker service create --name nginx --secret source=somesecret,target=somesecret,mode=0400 nginx
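The --secret value is a comma-separated list of key=value pairs (source, target, uid, gid, mode), and mode is an octal permission mask. This sketch, a hypothetical helper rather than part of Docker, builds that value and shows how a mode renders in ls -l form: 0400 becomes -r--------, readable by the owner only.

```python
import stat


def secret_option(source, target=None, uid=None, gid=None, mode=None):
    """Build the value for `docker service create --secret` in key=value form."""
    fields = [f"source={source}"]
    if target is not None:
        fields.append(f"target={target}")
    if uid is not None:
        fields.append(f"uid={uid}")
    if gid is not None:
        fields.append(f"gid={gid}")
    if mode is not None:
        fields.append(f"mode={mode:04o}")  # written in octal, e.g. 0400
    return ",".join(fields)


def describe_mode(mode):
    """Render permission bits the way `ls -l` shows them for a regular file."""
    return stat.filemode(stat.S_IFREG | mode)


if __name__ == "__main__":
    print(secret_option("somesecret", target="somesecret", mode=0o400))
    print(describe_mode(0o400))
```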

Open a shell in the nginx container (for example, with docker exec -it <container_id> bash); the secret should be available there:

    root@3989dd5f7426:/# cat /run/secrets/somesecret
    This is my secret
    root@3989dd5f7426:/# ls -l /run/secrets/somesecret
    -r-------- 1 root root 19 Aug 28 16:45 /run/secrets/somesecret
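On the application side, reading a Swarm secret is ordinary file I/O, since secrets are mounted under /run/secrets/<target> by default. A minimal sketch; the secrets_dir parameter exists only so the function can be tested outside a container, and stripping trailing newlines is an assumption about how the secret file was written.

```python
import os

SECRETS_DIR = "/run/secrets"  # default mount point for Docker Swarm secrets


def read_secret(name, secrets_dir=SECRETS_DIR):
    """Read a secret mounted as a file and drop trailing newlines."""
    path = os.path.join(secrets_dir, name)
    with open(path, encoding="utf-8") as fh:
        return fh.read().rstrip("\n")


if __name__ == "__main__":
    print(len(read_secret("somesecret")), "characters read")  # avoid printing the secret itself
```

Reading the value at the point of use, rather than copying it into an environment variable at startup, avoids reintroducing the leak demonstrated earlier.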

The secret management system can do much more than this, but the example above is enough to start storing secrets properly and managing them from a single control point.