By the end of this article, you’ll know not only what Nginx is, but also why it was created and what it’s used for, with tons of real-world examples that make the whole thing finally click.

Back When the Web Was Simple

In the early days of the web, life was easy. Fewer users, fewer websites, fewer headaches. A browser requested a webpage from a single web server. That web server was literally just a machine with some software installed on it — software that assembled a webpage and sent it back to the browser, which then showed it to the user.

This piece of software running on a server machine, responding to browser requests?

That’s a web server. Nginx, first released in 2004, is one of them: a server application built to respond to HTTP requests.

Then the Web Got Popular (And Things Got Messy)

Suddenly websites weren’t dealing with hundreds of requests — they were dealing with thousands, even millions.

Try to imagine one server handling millions of requests.

Spoiler: it can’t. That’s way beyond what a single machine or a single web server process can manage.

So what did we do?

We added more servers. Say, ten Nginx web servers.

But then another question popped up: how do we decide which server should handle each incoming request?

That’s where load balancing enters the scene.

Nginx as a Load Balancer (AKA the Traffic Concierge)

The same Nginx that acted as a web server could also be configured to act as a load balancer: a reverse proxy that accepts incoming browser requests and forwards them to one of the backend servers.

“Proxy” simply means doing something on someone else’s behalf.

Nginx accepts the request on behalf of the backend servers. Like a concierge at the front desk directing guests to the right room.

How It Distributes Traffic

It depends on the load-balancing method you configure. Two common ones:

- Round Robin: The classic, and Nginx’s default. Each server gets a request in turn, in a cyclical pattern.
- Least Connections: The server with the fewest active connections gets the next request.

Simple logic, big impact.
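To make the two strategies concrete, here’s a toy Python sketch of how each might pick the next backend. It’s an illustration, not Nginx’s actual implementation (which also handles weights, failed servers and more), and the server names are hypothetical.

```python
import itertools


class RoundRobin:
    """Hand out backends in a fixed cycle: a, b, c, a, b, c, ..."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(backends)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Pick the backend with the fewest in-flight requests (standing in for active connections)."""

    def __init__(self, backends):
        # Active count per backend; on a tie, min() returns the first one listed.
        self.active = {backend: 0 for backend in backends}

    def pick(self):
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1  # a request starts on this backend
        return backend

    def release(self, backend):
        self.active[backend] -= 1  # that request has finished


servers = ["app1", "app2", "app3"]  # hypothetical backend names

rr = RoundRobin(servers)
print([rr.pick() for _ in range(5)])  # ['app1', 'app2', 'app3', 'app1', 'app2']

lc = LeastConnections(servers)
first = lc.pick()   # app1 (all idle, first one wins the tie)
second = lc.pick()  # app2
lc.release(first)   # app1 finishes its request
print(lc.pick())    # app1 again, since it now has 0 active requests
```

In Nginx itself, round robin is what an `upstream` group does by default, and adding the `least_conn;` directive to the group switches it to least connections.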

At this point, Nginx is functioning as both:

- A web server, and
- A proxy / load balancer

Same technology, different tasks.

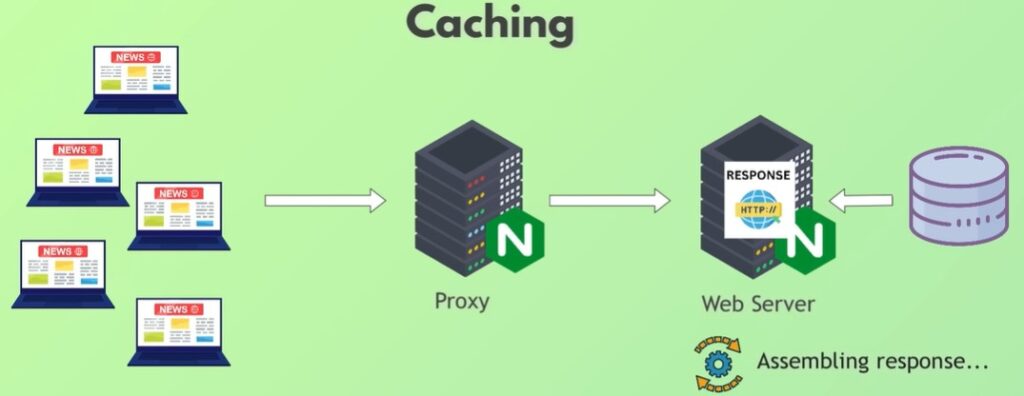

Caching: Serving Millions Without Melting Down

Picture this: The New York Times publishes a new article. Millions of people open it within minutes.

Imagine if every request had to go to a backend server that:

- pulled images from storage,
- queried the database,
- built the HTML,
- assembled links,
- packaged the response,
- and sent it back…

A million times.

That’s a disaster.

Much better approach?

Assemble the article once, store the final version, and serve that copy to everyone.

That’s caching, one of Nginx’s most important proxy features.

It avoids hitting your database and backend servers over and over.
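Here’s a minimal Python sketch of that idea, assuming a simple in-memory store: build the response once, keep it for a time-to-live (TTL), and hand out the stored copy until it expires. The class and function names are hypothetical; Nginx’s own cache keeps responses on disk and is set up with directives such as `proxy_cache_path` and `proxy_cache_valid`.

```python
import time


class TTLCache:
    """Keep each built response for a fixed number of seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry is easy to test
        self._store = {}    # key -> (expires_at, value)

    def get_or_build(self, key, build):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]       # cache hit: the backend is not touched
        value = build()           # cache miss or expired: do the expensive work
        self._store[key] = (now + self.ttl, value)
        return value


backend_calls = 0


def render_article():
    """Hypothetical stand-in for the expensive backend work (DB, images, HTML)."""
    global backend_calls
    backend_calls += 1
    return "<html>...new article...</html>"


cache = TTLCache(ttl_seconds=60)
for _ in range(1_000_000):  # a million readers within the same minute
    cache.get_or_build("/news/new-article", render_article)

print(backend_calls)  # 1
```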

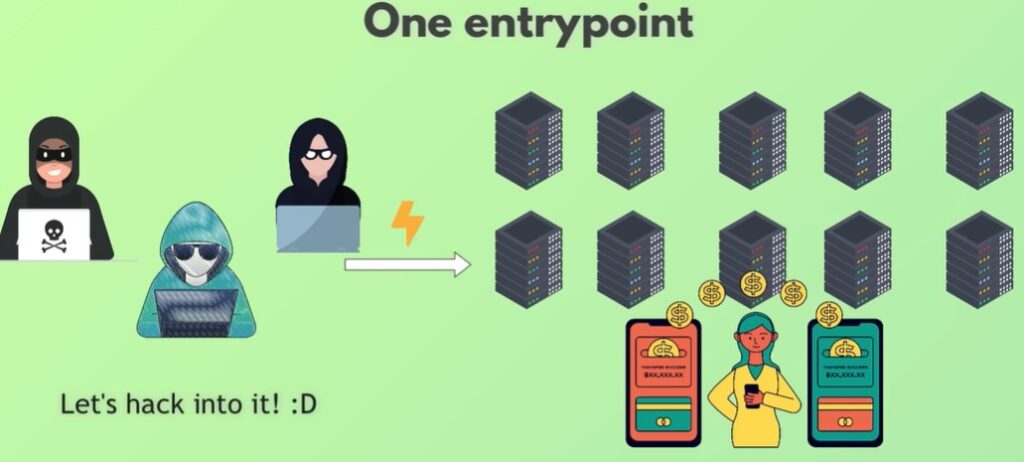

Security: Reducing the Attack Surface

Let’s level up: imagine you run a large banking app or something like Facebook, with 100 backend servers humming away.

These servers are a juicy target for hackers.

If you expose all 100 servers to the public internet, you’re basically inviting trouble. A hacker only needs a vulnerability in one of those servers — one forgotten library update — and they could break into the entire system.

Better solution?

Expose only the Nginx proxy to the public and hide all backend servers behind it.

Now, instead of 100 publicly reachable servers, you have a single entry point to harden.

Attack surface reduced dramatically.

Nginx becomes a shield — a protective layer between the outside world and your internal infrastructure.

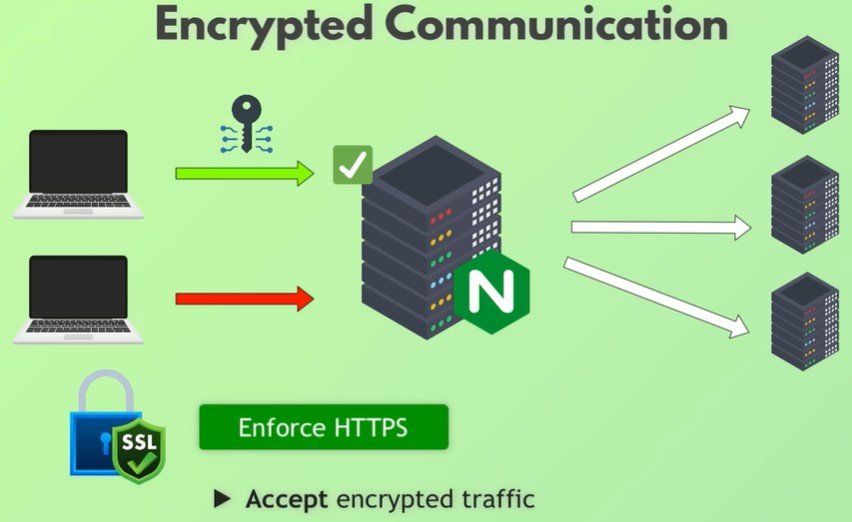

More Security: Encrypted Traffic and SSL

Since we have just one entry point now, we can enforce strict encryption.

The client (for example, the browser) sends encrypted requests to the Nginx proxy over HTTPS. Even if someone intercepts the traffic, they can’t read it.

Now, depending on your setup:

- Nginx can decrypt the request itself (known as SSL/TLS termination), or
- for extra security, Nginx simply forwards the still-encrypted traffic to the backend server, which decrypts it internally (known as TLS passthrough).

Nginx can also be configured to accept only HTTPS, either by rejecting plain-HTTP requests or, more commonly, by redirecting them to the HTTPS version of the same URL.
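A common way to enforce HTTPS is to answer every plain-HTTP request with a permanent (301) redirect to the same URL over HTTPS. In Nginx that’s usually a one-line `return 301 https://$host$request_uri;` in a server block listening on port 80. The Python function below only sketches the decision that line makes; the function name, host and path are made-up examples, and no real network traffic is involved.

```python
def handle_plain_http(host, request_uri):
    """Answer a plain-HTTP request with a redirect to its HTTPS version.

    Mirrors the Nginx pattern `return 301 https://$host$request_uri;`
    and returns (status_code, headers) instead of writing to a socket.
    """
    location = f"https://{host}{request_uri}"
    return 301, {"Location": location}


status, headers = handle_plain_http("example.com", "/account?tab=security")
print(status, headers["Location"])  # 301 https://example.com/account?tab=security
```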

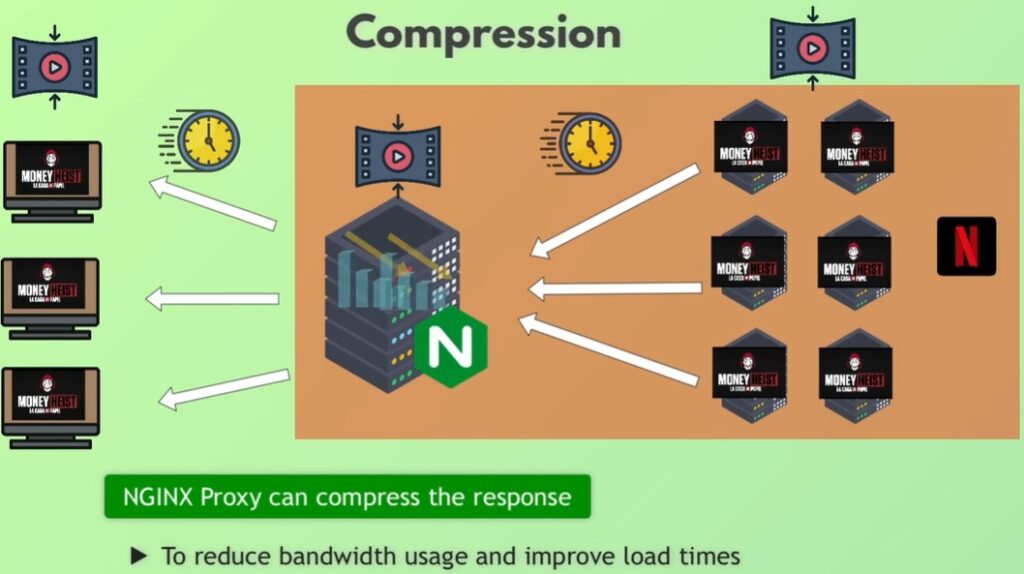

Compression: Think About Netflix at 7 PM

Yes — Netflix uses Nginx.

Imagine it’s evening in New York and a new hit show drops. Millions of users open Netflix at the same time. Suddenly Nginx must deliver massive HD video files to everyone.

Sending huge files to millions of people?

That’s a bandwidth nightmare.

Solution? Compression.

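Nginx can compress responses on the fly (for example with gzip). This pays off most for text-based content:

- HTML pages
- CSS and JavaScript files
- JSON and other API responses

Large images and video chunks are usually stored in formats that are already compressed (such as JPEG or H.264), so compressing them again saves very little; their size is cut down when the media is encoded, before Nginx ever serves it.

For text content, compression reduces the bandwidth used by both the client and the server.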
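A quick experiment with Python’s standard `gzip` module shows where compression pays off. Repetitive text like HTML shrinks dramatically, while data that is already compressed barely shrinks at all. Random bytes are used here as an assumed stand-in for JPEG or video data, since to a compressor the two look much the same.

```python
import gzip
import os

# Repetitive, text-based content, like a typical HTML page.
html = ("<div class='card'><a href='/article'>Read more</a></div>\n" * 2000).encode()

# Random bytes: a stand-in for already-compressed media such as JPEG or video.
media = os.urandom(len(html))

for name, payload in [("html", html), ("media", media)]:
    compressed = gzip.compress(payload)
    ratio = len(compressed) / len(payload)
    print(f"{name}: {len(payload)} -> {len(compressed)} bytes ({ratio:.1%} of original)")
```

In Nginx, this behaviour is switched on with `gzip on;`, and `gzip_types` lists which additional MIME types (for example `text/css` or `application/json`) should be compressed; `text/html` responses are always included.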

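It also supports sending responses in parts: HTTP chunked transfer encoding streams a response piece by piece as it becomes ready, and byte-range requests let a client, such as a video player, fetch just the portion of a file it needs, so you can start watching before the whole file arrives.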
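Chunked transfer encoding is easy to see in miniature. This Python sketch (the function name is hypothetical, not part of any library) frames a response body the way HTTP/1.1 does: each piece is prefixed with its length in hexadecimal, and a zero-length chunk marks the end, so the receiver can process each piece as it arrives without knowing the total size up front.

```python
def encode_chunked(chunks):
    """Encode byte chunks using HTTP/1.1 chunked transfer encoding.

    Each chunk is framed as <size in hex>\r\n<data>\r\n and the body
    ends with a zero-size chunk: 0\r\n\r\n
    """
    out = bytearray()
    for chunk in chunks:
        if not chunk:
            continue  # an empty chunk would signal the end too early
        out += f"{len(chunk):X}\r\n".encode("ascii")
        out += chunk + b"\r\n"
    out += b"0\r\n\r\n"
    return bytes(out)


body = encode_chunked([b"Hello, ", b"streaming ", b"world!"])
print(body)
# b'7\r\nHello, \r\nA\r\nstreaming \r\n6\r\nworld!\r\n0\r\n\r\n'
```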

Configuring Nginx: How It Knows What to Do

Everything Nginx does is controlled through its configuration file, using directives that specify:

- whether it acts as a web server or proxy
- which port it listens on
- caching rules
- SSL certificates
- load balancing logic
- file locations
- request routing

Examples include:

- A simple web server on port 80
- Redirecting all port 80 traffic to HTTPS
- HTTPS server listening on 443 with SSL cert locations
- Load balancer config with your chosen algorithm
- Caching with fine-grained time-to-live settings

The configuration is extremely flexible and granular, which is part of the reason Nginx became so widely used.
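To make a few of these examples concrete, here’s a small Python helper that prints an Nginx config combining a port-80 redirect to HTTPS, an HTTPS server with certificate locations, a least-connections load balancer and a 10-minute cache TTL. The function, domain, upstream name, cache zone name and file paths are hypothetical, and the output is meant to sit inside Nginx’s `http` context (for example, as a file the main `nginx.conf` includes). The directives themselves are standard Nginx ones, but treat this as a starting sketch and validate any real config with `nginx -t`.

```python
def render_nginx_config(domain, cert_path, key_path, backends):
    """Build an Nginx config fragment (for the http context) from a few parameters.

    Covers: a port-80 redirect to HTTPS, an HTTPS server on 443 with
    certificate locations, a least-connections upstream, and a cache TTL.
    """
    upstream_servers = "\n".join(f"    server {backend};" for backend in backends)
    return f"""\
proxy_cache_path /var/cache/nginx keys_zone=app_cache:10m;

upstream app_backend {{
    least_conn;
{upstream_servers}
}}

server {{
    listen 80;
    server_name {domain};
    return 301 https://$host$request_uri;
}}

server {{
    listen 443 ssl;
    server_name {domain};
    ssl_certificate     {cert_path};
    ssl_certificate_key {key_path};

    location / {{
        proxy_pass http://app_backend;
        proxy_cache app_cache;
        proxy_cache_valid 200 10m;
    }}
}}
"""


print(render_nginx_config(
    domain="example.com",                      # hypothetical domain
    cert_path="/etc/nginx/certs/example.crt",  # hypothetical certificate path
    key_path="/etc/nginx/certs/example.key",   # hypothetical key path
    backends=["10.0.0.11:8080", "10.0.0.12:8080"],
))
```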

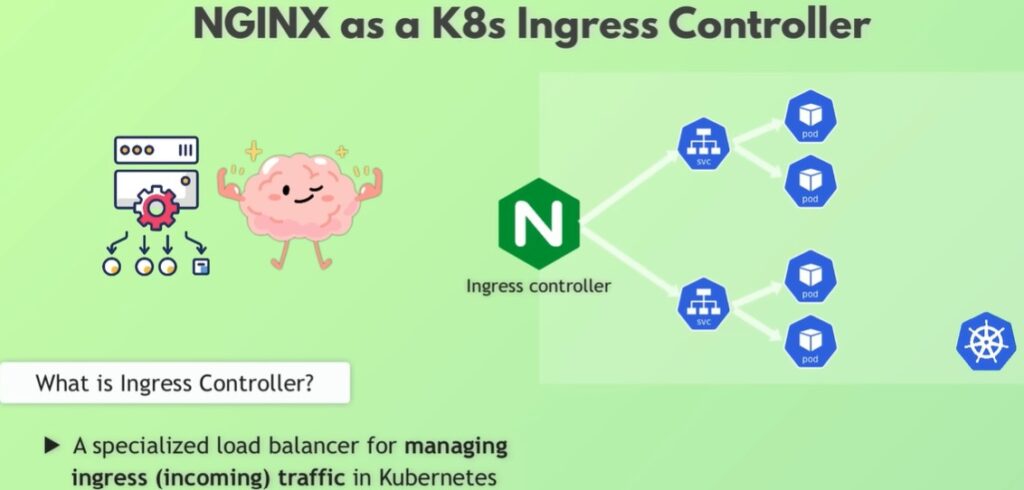

Nginx in the Container Era: Kubernetes Ingress Controller

Nginx isn’t limited to traditional server setups anymore; it’s also huge in the container world.

In Kubernetes, Nginx is one of the most popular Ingress Controller implementations.

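An Ingress Controller is basically a reverse proxy with advanced load balancing for Kubernetes clusters: it routes external HTTP(S) traffic to services inside the cluster according to Ingress rules.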

The logic is the same as before, but applied inside the cluster:

- It receives traffic first,
- decides where it should go,
- and forwards it to the correct service.

For example:

- `/cart` routes to the cart microservice
- `/payment` routes to the payment service
- `/profile` routes to the user profile service
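Under the hood, this kind of routing is a prefix match on the request path. The Python sketch below is a simplified stand-in for what an Ingress Controller does with path rules; the function and service names are hypothetical, and real controllers also match hostnames, handle TLS and load-balance across pods. It picks the rule with the longest matching prefix and, like Kubernetes’ `Prefix` path type, treats `/cart` as matching `/cart/items` but not `/cartoon`.

```python
def route(path, rules, default=None):
    """Return the service for the longest matching path prefix.

    Matching is done on whole path segments, so /cart matches
    /cart and /cart/items but not /cartoon.
    """
    best_len, best_service = -1, default
    for prefix, service in rules.items():
        prefix = prefix.rstrip("/")  # "/" becomes "", which matches every path
        matches = path == prefix or path.startswith(prefix + "/")
        if matches and len(prefix) > best_len:
            best_len, best_service = len(prefix), service
    return best_service


rules = {  # hypothetical service names
    "/cart": "cart-service",
    "/payment": "payment-service",
    "/profile": "profile-service",
}

print(route("/cart/items/42", rules))         # cart-service
print(route("/payment", rules))               # payment-service
print(route("/cartoon", rules, "not-found"))  # not-found
```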

In a typical cloud setup, a cloud load balancer (such as AWS ELB) receives the public traffic and forwards it to the Ingress Controller, so the cluster isn’t exposed directly to the internet. That adds an extra security layer.

What About Apache? How’s It Different?

Apache was the king before Nginx arrived. It did the same thing — web server, later proxy — and was widely used.

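But Nginx had several advantages:

- Much faster under heavy concurrency, thanks to an event-driven design in which each worker process handles many connections, instead of Apache’s classic model of dedicating a process or thread to each one
- Lightweight, with a small memory footprint
- Excellent at serving static content
- Easier config
- A natural fit for containers, thanks to that small footprint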

That’s why Nginx exploded in popularity.

Wrapping Up

So now you have a complete, clear, real-world picture of Nginx:

- It’s a web server.
- It’s a proxy.
- It’s a load balancer.
- It’s a caching layer.
- It’s a security shield.
- It compresses content.
- It acts as a Kubernetes Ingress Controller.

All in one insanely fast, flexible piece of software.