Unikernel

The development of virtualization technologies made the move to cloud computing possible. Hypervisors such as Xen and KVM became the foundation of what we now know as Amazon Web Services (AWS) and Google Cloud Platform (GCP). Modern hypervisors can manage hundreds of virtual machines combined into a single cluster, but traditional general-purpose operating systems are poorly adapted to, and optimized for, such an environment. A general-purpose OS is designed, above all, to support as many different applications as possible, so its kernel includes all kinds of drivers, libraries, protocols, schedulers, and so on. However, most virtual machines deployed in the cloud today run a single application, for example a DNS server, a proxy, or a database. Such an application relies on only a small, specific part of the OS kernel. All the other parts simply waste system resources and, merely by existing, increase the number of potential attack vectors. Indeed, the larger the code base, the harder it is to eliminate all its flaws, and the more potential vulnerabilities, bugs, and other weaknesses it contains. This problem encourages specialists to develop highly specialized operating systems with a minimal set of kernel functionality, that is, systems built to support one specific application.

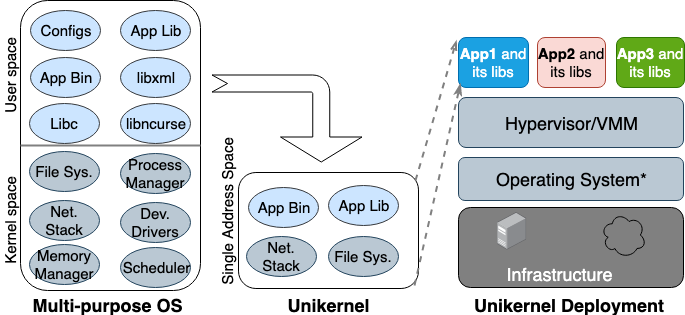

The unikernel idea first appeared in the 1990s. It took shape as a specialized machine image with a single address space that can run directly on a hypervisor. Such an image packages the kernel, the application, and the kernel functions the application depends on into a single unit. Nemesis and Exokernel were the two earliest research systems built on this idea. The packaging and deployment process is shown in the picture above.

A unikernel splits the kernel into a set of libraries and puts only the components the application needs into the image. Like a regular virtual machine, a unikernel is deployed and run on a hypervisor. Thanks to its small size, it boots quickly and scales quickly. The main advantages of unikernels are improved security, a small storage footprint, a high degree of optimization, and fast boot times. Since these images contain only the libraries the application depends on, and there is no OS shell unless one was deliberately included, attackers have very few attack vectors to exploit.
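To make "only the necessary components" concrete, here is a minimal sketch, assuming the kernel is described as a dependency graph of library components. The component names in `LIBRARY_DEPS` and the function `select_libraries` are hypothetical and do not come from any real unikernel toolchain; the point is only that a build can include the transitive closure of what the application asks for and leave everything else out.

```python
from collections import deque
from typing import Dict, Iterable, Set

# Hypothetical catalogue: each kernel library component mapped to the
# components it directly depends on. Names are illustrative only.
LIBRARY_DEPS: Dict[str, Set[str]] = {
    "dns": {"udp"},
    "udp": {"ip"},
    "tcp": {"ip", "timer"},
    "ip": {"netdev"},
    "netdev": {"virtio_net"},
    "virtio_net": set(),
    "timer": set(),
    "fs": {"block"},
    "block": {"virtio_blk"},
    "virtio_blk": set(),
    "shell": {"console", "fs"},
    "console": set(),
}


def select_libraries(required: Iterable[str],
                     deps: Dict[str, Set[str]] = LIBRARY_DEPS) -> Set[str]:
    """Return every component reachable from the application's direct needs.

    A breadth-first walk of the dependency graph: only components the
    application transitively depends on end up in the image.
    """
    selected: Set[str] = set()
    queue = deque(required)
    while queue:
        lib = queue.popleft()
        if lib in selected:
            continue  # already included via another path
        if lib not in deps:
            raise KeyError(f"unknown library component: {lib}")
        selected.add(lib)
        queue.extend(deps[lib] - selected)
    return selected


if __name__ == "__main__":
    # A DNS server needs only the networking stack, not the shell or file system.
    print(sorted(select_libraries(["dns"])))
    # ['dns', 'ip', 'netdev', 'udp', 'virtio_net']
```

In this toy graph, the shell, console, and file-system components never reach the DNS image, which mirrors the article's point that code the application does not use is simply absent rather than merely disabled.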

Not only is it difficult for attackers to gain access to these unikernels, but the impact of any compromise is also limited to a single unikernel instance. Since unikernel images are only a few megabytes in size, they boot in tens of milliseconds, and literally hundreds of instances can be launched on a single host. Because they allocate memory in a single address space instead of using multilevel page tables as most modern operating systems do, unikernel applications have lower memory access latency than the same application running in a regular virtual machine. Because the application is built together with the kernel into one image, the compiler can perform static type checking and optimize the resulting binary.

Unikernel.org maintains a list of unikernel projects. Yet despite all their distinctive features, unikernels are not widely used. When Docker acquired Unikernel Systems in 2016, the community expected the company to start packaging containers as unikernels. But three years have passed, and there are still no signs of such integration. One of the main reasons for this slow adoption is that there is still no mature tooling for building unikernel applications, and most such applications can run only on specific hypervisors. In addition, porting an application to a unikernel may require manually rewriting code in another language, including rewriting the kernel libraries it depends on. Finally, monitoring or debugging unikernels is either impossible or has a significant impact on performance.

All these limitations keep developers from switching to this technology. It should be noted that unikernels and containers share many properties. Both are narrowly focused, immutable images: the components inside them cannot be updated or patched in place, so you always have to build a new image to patch the application. Today, unikernels are where Docker's predecessors once were: no container runtime was available back then, and developers had to use basic tools (chroot, unshare, and cgroups) to build an isolated application environment.
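The immutability described above can be modeled in a few lines. This is a minimal sketch, assuming an image is identified by a digest of its contents; the `Image` class and the `patch` helper are hypothetical and not part of any real image format. Patching never modifies an existing image: it produces a new one with a different identity.

```python
import hashlib
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class Image:
    """Toy model of an immutable image: application version plus bundled libraries."""
    app_version: str
    libraries: Tuple[str, ...]

    @property
    def digest(self) -> str:
        # Content-addressed ID: any change to the contents yields a new digest.
        payload = self.app_version + "|" + ",".join(self.libraries)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


def patch(image: Image, new_version: str) -> Image:
    """Apply a patch by building a new image; the original is left untouched."""
    return replace(image, app_version=new_version)


if __name__ == "__main__":
    v1 = Image("1.0.0", ("dns", "udp", "ip"))
    v2 = patch(v1, "1.0.1")
    print(v1.app_version, v2.app_version)  # 1.0.0 1.0.1
    print(v1.digest != v2.digest)          # True
```

Assigning to a field of a frozen dataclass raises an error, which stands in for "the components inside cannot be updated"; the only way forward is to build and deploy `v2`.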

IBM Nabla

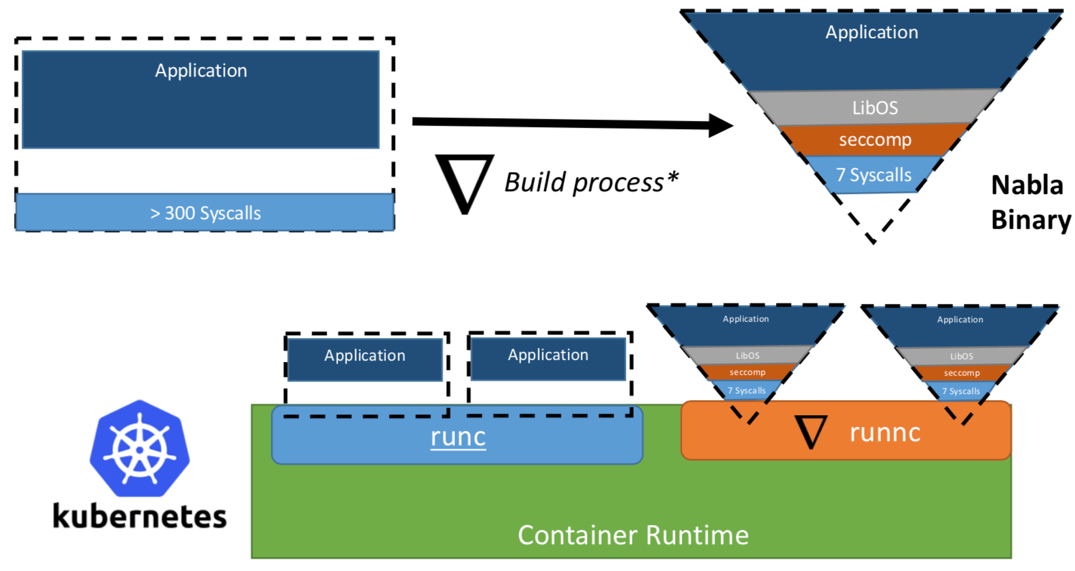

Researchers at IBM proposed the concept of “unikernel as a process”: a unikernel application that runs as a process on a specialized hypervisor. IBM's “Nabla containers” project strengthened the security perimeter of unikernels by replacing the general-purpose hypervisor (for example, QEMU) with its own component called Nabla Tender. The rationale behind this approach is that calls between the unikernel and the hypervisor still account for the most attack vectors. That is why a hypervisor dedicated to unikernels, with fewer allowed system calls, can significantly strengthen the security perimeter. Nabla Tender intercepts the calls that the unikernel routes to the hypervisor and translates them into host system calls itself. At the same time, a Linux seccomp policy blocks all other system calls that the Tender does not need. As a result, a unikernel together with Nabla Tender runs as an ordinary process in the host's user space. The picture above shows how Nabla creates a thin interface between the unikernel and the host.
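The interception-and-translation scheme can be illustrated with a toy model. This is a minimal sketch under stated assumptions: the hypercall names (`console_write`, `net_read`, `clock`, `halt`), the file-descriptor numbers, and the allowlist contents are all hypothetical and are not Nabla's actual hypercall interface or seccomp profile. `ToyHost` plays the role of the host kernel behind a seccomp-style allowlist, and `ToyTender` maps each hypercall to exactly one permitted host system call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, FrozenSet, List


class SeccompViolation(Exception):
    """Raised when a host syscall outside the allowlist is attempted."""


@dataclass
class ToyHost:
    """Stand-in for the host kernel behind a seccomp-style allowlist filter."""
    allowed: FrozenSet[str]
    log: List[str] = field(default_factory=list)

    def syscall(self, name: str, *args: object) -> int:
        # Like a seccomp filter: anything not on the allowlist is refused.
        if name not in self.allowed:
            raise SeccompViolation(name)
        self.log.append(name)
        return 0  # pretend the call succeeded


class ToyTender:
    """Hypothetical tender: maps each unikernel hypercall to one host syscall."""

    def __init__(self, host: ToyHost) -> None:
        self.host = host
        # Hypothetical hypercall table; fd numbers 1 and 3 are arbitrary.
        self.table: Dict[str, Callable[..., int]] = {
            "console_write": lambda data: host.syscall("write", 1, data),
            "net_read": lambda size: host.syscall("read", 3, size),
            "clock": lambda: host.syscall("clock_gettime"),
            "halt": lambda: host.syscall("exit_group", 0),
        }

    def hypercall(self, name: str, *args: object) -> int:
        handler = self.table.get(name)
        if handler is None:
            raise ValueError(f"unsupported hypercall: {name}")
        return handler(*args)


if __name__ == "__main__":
    host = ToyHost(allowed=frozenset({"write", "read", "clock_gettime", "exit_group"}))
    tender = ToyTender(host)
    tender.hypercall("console_write", b"hello\n")
    tender.hypercall("clock")
    print(host.log)  # ['write', 'clock_gettime']
    try:
        host.syscall("execve", "/bin/sh")  # e.g. an attempt to spawn a shell
    except SeccompViolation as err:
        print("blocked:", err)  # blocked: execve
```

The design choice the sketch highlights is layering: even if an attacker gained control of the tender process, the filter in front of the host still rejects every system call that is not on the short list.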

The developers claim that Nabla Tender uses fewer than seven system calls to interact with the host. Since system calls are the bridge between user-space processes and the operating system kernel, the fewer system calls are available, the fewer vectors there are for attacking the kernel. Another advantage of running a unikernel as a process is that such applications can be debugged with a wide range of standard tools, for example gdb.

To work with container orchestration platforms, Nabla provides a dedicated runtime, runnc, that implements the Open Container Initiative (OCI) standard. This standard defines an API between clients (e.g., Docker, kubectl) and a runtime (e.g., runc). Nabla also comes with an image builder whose output runnc can then run. However, because unikernels and traditional containers handle file systems differently, Nabla images do not conform to the OCI image specification, and therefore Docker images are not compatible with runnc. At the time of writing, the project is still at an early stage of development. It has other limitations as well, for example no support for mounting or accessing host file systems, for adding multiple network interfaces (required for Kubernetes), or for using images built with other unikernel projects (for example, MirageOS).